Why is the Kafka Messaging Modular Input not working on a Splunk 6.2.3 Universal Forwarder?

tpaulsen

Contributor

06-08-2015

02:01 AM

Hello,

I am currently trying to make Damien's Kafka Modular Input run on a Splunk Universal Forwarder 6.2.3 under RedHat 6.

Unfortunately, I am getting the following errors in splunkd.log:

06-02-2015 09:33:38.282 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".

06-02-2015 09:33:38.282 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" SLF4J: Defaulting to no-operation (NOP) logger implementation

06-02-2015 09:33:38.282 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.

We are running Python 2.6 on the host. The recommended version is Python 2.7. Could this be the root cause?

Here is our inputs.conf:

# Kafka Data Inputs {{{

[kafka://kafka-hostname]

additional_consumer_properties = group.id=7

auto_commit_interval_ms = 1000

group_id = 6

index = test

sourcetype = kafka-test

topic_name = logstash

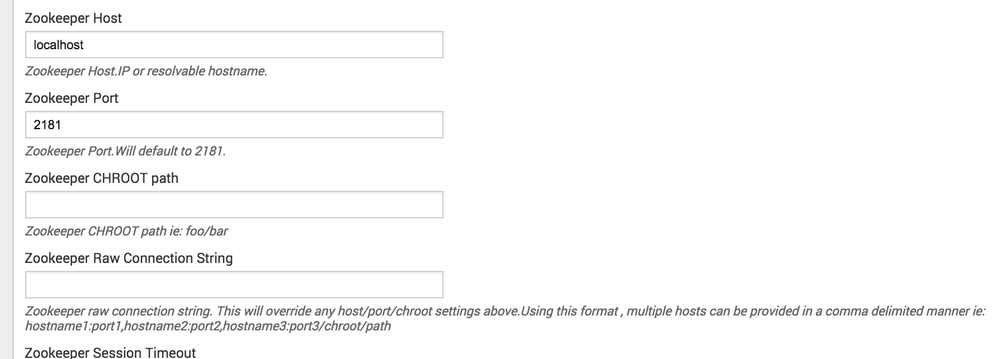

zookeeper_connect_host = zoo-hostname1,zoo-hostname2,zoo-hostname3,zoo-hostname4,zoo-hostname5

zookeeper_connect_port = 2181

zookeeper_session_timeout_ms = 400

zookeeper_sync_time_ms = 200

disabled = false

# }}}
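One detail in the stanza above is that `group_id = 6` and the `group.id=7` entry inside `additional_consumer_properties` name two different consumer groups. Which of the two the modular input ends up using is not stated in this thread. Below is a minimal Python 3 sketch for spotting this kind of mismatch. It assumes the extra properties are comma-separated `key=value` pairs, and `find_group_id_conflicts` is a hypothetical helper, not part of the add-on:

```python
import configparser

# Relevant lines copied from the stanza above (fold-marker comments omitted).
INPUTS_CONF = """
[kafka://kafka-hostname]
additional_consumer_properties = group.id=7
group_id = 6
topic_name = logstash
"""


def find_group_id_conflicts(conf_text):
    """Return (stanza, group_id, group.id override) for stanzas where the two differ."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    conflicts = []
    for stanza in parser.sections():
        group_id = parser.get(stanza, "group_id", fallback=None)
        extra = parser.get(stanza, "additional_consumer_properties", fallback="")
        # Assumption: extra properties are comma-separated key=value pairs.
        overrides = dict(
            pair.strip().split("=", 1) for pair in extra.split(",") if "=" in pair
        )
        override = overrides.get("group.id")
        if override is not None and override != group_id:
            conflicts.append((stanza, group_id, override))
    return conflicts


print(find_group_id_conflicts(INPUTS_CONF))
```

An empty list would mean that the two settings agree, or that only one of them is set.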

When I use only one zoo-host and leave the following lines out:

additional_consumer_properties = group.id=7

auto_commit_interval_ms = 1000

group_id = 6

I get different error messages in splunkd.log:

06-02-2015 11:06:15.242 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" at kafka.consumer.ZookeeperConsumerConnector.kafka$consumer$ZookeeperConsumerConnector$$registerConsumerInZK(ZookeeperConsumerConnector.scala:226)

06-02-2015 11:06:15.242 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" at kafka.consumer.ZookeeperConsumerConnector.consume(ZookeeperConsumerConnector.scala:211)

06-02-2015 11:06:15.242 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" at kafka.javaapi.consumer.ZookeeperConsumerConnector.createMessageStreams(ZookeeperConsumerConnector.scala:80)

06-02-2015 11:06:15.242 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" at kafka.javaapi.consumer.ZookeeperConsumerConnector.createMessageStreams(ZookeeperConsumerConnector.scala:92)

06-02-2015 11:06:15.243 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" at com.splunk.modinput.kafka.KafkaModularInput$MessageReceiver.run(Unknown Source)

06-02-2015 11:06:15.248 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" Stanza kafka://kafka-dev-290687.lhotse.ov.otto.de : Error running message receiver : java.lang.IllegalArgumentException: Invalid path string "/consumers//ids/_kafka-dev-290687-1433235975245-76b9eb2e" caused by empty node name specified @11

06-02-2015 11:06:15.248 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" at org.apache.zookeeper.common.PathUtils.validatePath(PathUtils.java:99)

06-02-2015 11:06:15.248 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" at org.apache.zookeeper.common.PathUtils.validatePath(PathUtils.java:35)

06-02-2015 11:06:15.248 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" at org.apache.zookeeper.ZooKeeper.create(ZooKeeper.java:626)

06-02-2015 11:06:15.248 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" at org.I0Itec.zkclient.ZkConnection.create(ZkConnection.java:87)

06-02-2015 11:06:15.248 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" at org.I0Itec.zkclient.ZkClient$1.call(ZkClient.java:308)

06-02-2015 11:06:15.248 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" at org.I0Itec.zkclient.ZkClient$1.call(ZkClient.java:304)

06-02-2015 11:06:15.248 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" at org.I0Itec.zkclient.ZkClient.retryUntilConnected(ZkClient.java:675)

06-02-2015 11:06:15.248 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" at org.I0Itec.zkclient.ZkClient.create(ZkClient.java:304)

06-02-2015 11:06:15.248 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" at org.I0Itec.zkclient.ZkClient.createEphemeral(ZkClient.java:328)

06-02-2015 11:06:15.248 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" at kafka.utils.ZkUtils$.createEphemeralPath(ZkUtils.scala:253)

06-02-2015 11:06:15.248 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" at kafka.utils.ZkUtils$.createEphemeralPathExpectConflict(ZkUtils.scala:268)

06-02-2015 11:06:15.248 +0200 ERROR ExecProcessor - message from "python /var/opt/splunk/etc/apps/kafka_ta/bin/kafka.py" at kafka.utils.ZkUtils$.createEphemeralPathExpectConflictHandleZKBug(ZkUtils.scala:306)

What am I doing wrong in my configuration?

Please help.
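The `Invalid path string "/consumers//ids/_kafka-dev-..."` message in the second trace is consistent with an empty consumer group. As far as I understand the ZooKeeper-based consumer in Kafka 0.8, it registers itself under `/consumers/<group>/ids/<group>_<host>-<timestamp>-<uuid>`, so a missing group id leaves an empty path segment and a consumer id that starts with `_`. The Python 3 sketch below rebuilds the path under that assumption. Both function names are hypothetical and exist only for illustration:

```python
def consumer_registry_path(group_id, consumer_suffix):
    """Build the assumed ZooKeeper registration path for a consumer."""
    consumer_id = f"{group_id}_{consumer_suffix}"
    return f"/consumers/{group_id}/ids/{consumer_id}"


def first_empty_node(path):
    """Return the offset of the first empty node name ('//') in a path, or None."""
    for i in range(1, len(path)):
        if path[i] == "/" and path[i - 1] == "/":
            return i
    return None


suffix = "kafka-dev-290687-1433235975245-76b9eb2e"  # taken from the log line above

no_group = consumer_registry_path("", suffix)
print(no_group)                    # /consumers//ids/_kafka-dev-290687-1433235975245-76b9eb2e
print(first_empty_node(no_group))  # 11, matching the "@11" in the error message

with_group = consumer_registry_path("6", suffix)
print(with_group)                  # /consumers/6/ids/6_kafka-dev-290687-1433235975245-76b9eb2e
print(first_empty_node(with_group))  # None
```

If this reading is right, the second set of errors comes from removing `group_id` rather than from the number of ZooKeeper hosts.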

Damien_Dallimor

Ultra Champion

09-04-2015

03:02 AM

Damien_Dallimor

Ultra Champion

06-10-2015

08:38 AM

Do you get the error if you try with a single ZooKeeper host?

tpaulsen

Contributor

09-04-2015

01:57 AM

Not yet. My attempts were interrupted by other project work involving Kafka and ELK. 😞
