extract field from json file

Hello

I have this query:

index="github_runners" sourcetype="testing" source="reports-tests"

| spath path=libraryPath output=library

| spath path=result.69991058{} output=testResult

| mvexpand testResult

| spath input=testResult path=fullName output=test_name

| spath input=testResult path=success output=test_outcome

| spath input=testResult path=skipped output=test_skipped

| spath input=testResult path=time output=test_time

| table library testResult test_name test_outcome test_skipped test_time

| eval status=if(test_outcome="true", "Passed", if(test_outcome="false", "Failed", if(test_skipped="true", "NotExecuted", "")))

| stats count sum(eval(if(status="Passed", 1, 0))) as passed_tests, sum(eval(if(status="Failed", 1, 0))) as failed_tests , sum(eval(if(status="NotExecuted", 1, 0))) as test_skipped by test_name library test_time

| eval total_tests = passed_tests + failed_tests

| eval success_ratio=round((passed_tests/total_tests)*100,2)

| table library, test_name, total_tests, passed_tests, failed_tests, test_skipped, success_ratio test_time

| sort + success_ratio

I'm trying to make it dynamic so that I see results for numbers other than '69991058'.

How can I do that?

I'm trying with a regex, but I must be doing something wrong: I get 0 results, while the first query does return results.

index="github_runners" sourcetype="testing" source="reports-tests"

| spath path=libraryPath output=library

| rex field=_raw "result\.(?<number>\d+)\{\}"

| spath path="result.number{}" output=testResult

| mvexpand testResult

| spath input=testResult path=fullName output=test_name

| spath input=testResult path=success output=test_outcome

| spath input=testResult path=skipped output=test_skipped

| spath input=testResult path=time output=test_time

| table library testResult test_name test_outcome test_skipped test_time

| eval status=if(test_outcome="true", "Passed", if(test_outcome="false", "Failed", if(test_skipped="true", "NotExecuted", "")))

| stats count sum(eval(if(status="Passed", 1, 0))) as passed_tests, sum(eval(if(status="Failed", 1, 0))) as failed_tests , sum(eval(if(status="NotExecuted", 1, 0))) as test_skipped by test_name library test_time

| eval total_tests = passed_tests + failed_tests

| eval success_ratio=round((passed_tests/total_tests)*100,2)

| table library, test_name, total_tests, passed_tests, failed_tests, test_skipped, success_ratio test_time

| sort + success_ratio

Thank you for posting mock data emulation. Obviously the app developers do not implement self-evident semantics and should be cursed. (Not just for Splunk's sake, but for every other developer's sanity.) If you have any influence on developers, demand that they change JSON structure to something like

{

"browser_id": "0123456",

"browsers": {

"fullName": "blahblah",

"name": "blahblah",

"state": 0,

"lastResult": {

"success": 1,

"failed": 2,

"skipped": 3,

"total": 4,

"totalTime": 5,

"netTime": 6,

"error": true,

"disconnected": true

},

"launchId": 7

},

"result": [

{

"id": 8,

"description": "blahblah",

"suite": [

"blahblah",

"blahblah"

],

"fullName": "blahblah",

"success": true,

"skipped": true,

"time": 9,

"log": [

"blahblah",

"blahblah"

]

}

],

"summary": {

"success": 10,

"failed": 11,

"error": true,

"disconnected": true,

"exitCode": 12

}

}

That is, isolate browser_id into a single top-level key that applies to browsers, result, and summary alike. The structure you shared cannot meaningfully carry more than one browser_id per event anyway. But if for some bizarre reason the browser_id needs to be passed along in the results because summary is not associated with browser_id in each event, say so expressly with a JSON key, like

{

"browsers": {

"id": "0123456",

"fullName": "blahblah",

"name": "blahblah",

"state": 0,

"lastResult": {

"success": 1,

"failed": 2,

"skipped": 3,

"total": 4,

"totalTime": 5,

"netTime": 6,

"error": true,

"disconnected": true

},

"launchId": 7

},

"result": {

"id": "0123456",

"output": [

{

"id": 8,

"description": "blahblah",

"suite": [

"blahblah",

"blahblah"

],

"fullName": "blahblah",

"success": true,

"skipped": true,

"time": 9,

"log": [

"blahblah",

"blahblah"

]

}

]

},

"summary": {

"success": 10,

"failed": 11,

"error": true,

"disconnected": true,

"exitCode": 12

}

}

Embedding data in a JSON key is the worst use of JSON, or of any structured data. (I mean, I recently lamented worse offenders, but imagine embedding data in a column name in SQL! The developer would be cursed by the entire world.)
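To make the payoff concrete, here is a minimal, untested sketch of how the original search could start if events followed the first structure above (a top-level browser_id, and result as a plain array). That structure is an assumption; the output field names are the ones from the question.

```spl
index="github_runners" sourcetype="testing" source="reports-tests"
| spath path=libraryPath output=library
| spath path=browser_id output=browser_id
| spath path=result{} output=testResult
| mvexpand testResult
| spath input=testResult path=fullName output=test_name
| spath input=testResult path=success output=test_outcome
| spath input=testResult path=skipped output=test_skipped
| spath input=testResult path=time output=test_time
| table library browser_id test_name test_outcome test_skipped test_time
```

Compare the spath path=result{} line with the hard-coded result.69991058{} in the question: once the ID is a value rather than a key, nothing in the search has to change from event to event.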

This said, if your developer has a gun over your head, or they are from a third party that you have no control over, you can SANitize their data, i.e., make the structure saner using SPL. But remember: A bad structure is bad not because a programming language has difficulty with it. A bad structure is bad because downstream developers cannot determine the actual semantics without reading the original manual. Do you have their manual to understand what each structure means? If not, you are very likely to misrepresent their intention, and therefore get the wrong result.

Caveat: As we are speaking semantics, I want to point out that your illustration uses the plural "browsers" as a key name as well as the singular "result" as another key name, yet the value of (plural) "browsers" is not an array, while the value of (singular) "result" is an array. If this is not the true structure, you have changed the semantics your developers intended, and the following may lead to wrong output.

Secondly, your illustrated data has a level 1 key of "0123456" in browsers, an identical level 1 key of "0123456" in result, a matching level 2 id "0123456" in browsers, and a different level 2 id "8" in result. I assume that all matching numbers are semantically identical and non-matching numbers are semantically different.

Here, I will give you SPL to interpret their intention as my first illustration, i.e., a single browser_id applies to the entire event. I will assume that you have Splunk 9 or above so fromjson works. (This can be solved using spath with a slightly more cumbersome quotation manipulation.)

Here is the code to detangle the semantic madness. This code does not require the first line, fields _raw. But doing so can help eliminate distractions.

| fields _raw ``` to eliminate unusable fields from bad structure ```

| fromjson _raw

| eval browser_id = json_keys(browsers), result_id = json_keys(result)

| eval EVERYTHING_BAD = if(browser_id != result_id OR mvcount(browser_id) > 1, "baaaaad", null())

| foreach browser_id mode=json_array

[eval browsers = json_delete(json_extract(browsers, <<ITEM>>), "id"),

result = json_extract(result, <<ITEM>>)]

| spath input=browsers

| spath input=result path={} output=result

| mvexpand result

| spath input=result

| spath input=summary

| fields - _* result_id browsers result summary

This is the output based on your mock data; to illustrate result[] array, I added one more mock element.

| browser_id | description | disconnected | error | exitCode | failed | fullName | id | lastResult.disconnected | lastResult.error | lastResult.failed | lastResult.netTime | lastResult.skipped | lastResult.success | lastResult.total | lastResult.totalTime | launchId | log{} | name | skipped | state | success | suite{} | time |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| ["0123456"] | blahblah | true | true | 12 | 11 | blahblah blahblah | 8 | true | true | 2 | 6 | 3 | 1 | 4 | 5 | 7 | blahblah blahblah | blahblah | true | 0 | true 10 | blahblah blahblah | 9 |
| ["0123456"] | blahblah 9 | true | true | 12 | 11 | blahblah blahblah9 | 9 | true | true | 2 | 6 | 3 | 1 | 4 | 5 | 7 | blahblah 9a blahblah 9b | blahblah | true | 0 | true 10 | blahblah9a blahblah9b | 11 |

In the table, "id" is from result[].

This is the emulation of expanded mock data. Here, I decided to not use format=json because this will preserve the pretty print format, also because Splunk will not show fromjson-style fields automatically. (With real data, fromjson-style fields are not used in 9.x.)

| makeresults

| eval _raw ="

{

\"browsers\": {

\"0123456\": {

\"id\": \"0123456\",

\"fullName\": \"blahblah\",

\"name\": \"blahblah\",

\"state\": 0,

\"lastResult\": {

\"success\": 1,

\"failed\": 2,

\"skipped\": 3,

\"total\": 4,

\"totalTime\": 5,

\"netTime\": 6,

\"error\": true,

\"disconnected\": true

},

\"launchId\": 7

}

},

\"result\": {

\"0123456\": [

{

\"id\": 8,

\"description\": \"blahblah\",

\"suite\": [

\"blahblah\",

\"blahblah\"

],

\"fullName\": \"blahblah\",

\"success\": true,

\"skipped\": true,

\"time\": 9,

\"log\": [

\"blahblah\",

\"blahblah\"

]

},

{

\"id\": 9,

\"description\": \"blahblah 9\",

\"suite\": [

\"blahblah9a\",

\"blahblah9b\"

],

\"fullName\": \"blahblah9\",

\"success\": true,

\"skipped\": true,

\"time\": 11,

\"log\": [

\"blahblah 9a\",

\"blahblah 9b\"

]

}

]

},

\"summary\": {

\"success\": 10,

\"failed\": 11,

\"error\": true,

\"disconnected\": true,

\"exitCode\": 12

}

}

"

| spath

``` the above partially emulates

index="github_runners" sourcetype="testing" source="reports-tests"

```


No, you can't (easily and efficiently) make such "dynamic" extraction.

Splunk is very good at dealing with key-value fields, but it doesn't have any notion of "structure" in data. It can parse out json or xml into flat key-value pairs in several ways (auto_kv, spath/xpath, indexed extractions) but all those methods have some drawbacks as the structure of the data is lost and is only partially retained in field naming.
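To see what that flattening looks like in practice, here is a small run-anywhere sketch. The ID 82348856 and the libraryPath value mimic the poster's event; the test names t1/t2 and their success values are made up for illustration.

```spl
| makeresults
| eval _raw="{\"libraryPath\":\"libs/funnels\",\"result\":{\"82348856\":[{\"fullName\":\"t1\",\"success\":true},{\"fullName\":\"t2\",\"success\":false}]}}"
| spath
| table libraryPath result.*
```

The extracted fields should come out named along the lines of result.82348856{}.fullName and result.82348856{}.success. The ID survives only as part of the field name, which is why a fixed spath path cannot follow it from event to event.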

So if you handle JSON/XML data, it's often the best idea (if you have the possibility, of course) to influence the event-emitting side so that the events are easily parseable and can be processed in Splunk without much overhead.

Because your data (which you haven't posted a sample of - shame on you 😉) most probably contains something like

{

[... some other part of json ...],

"result": {

"some_event_id": { [... event data ...] },

"another_event_id": { [... event data ...] }

}

}

While it would be much better to have it as

{

[...]

"result": [

{

"id": "first_id",

[... result details ...]

},

{

"id": "another_id",

[... result details ...]

}

]

}

It would be much better because then you'd have a static, easily accessible field called id.

Of course, from Splunk's point of view, it would be even better if you managed to flatten the events further (possibly splitting each one into several separate events).

With the format you have, it's gonna be tough: the results are not parsed as a single multivalued field, because your JSON has separate, differently named fields rather than one array. You might try some clever foreach magic, but I can't guarantee success here.

An example of such an approach, as a run-anywhere search:

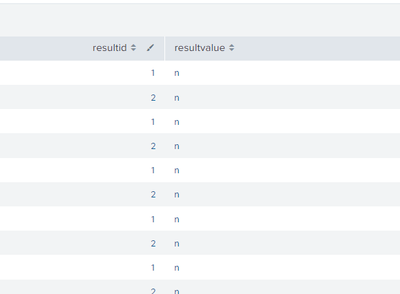

| makeresults

| eval json="{\"result\":{\"1\":[{\"a\":\"n\"},{\"b\":\"m\"}],\"2\":[{\"a\":\"n\"},{\"b\":\"m\"}]}}"

| spath input=json

| foreach result.*{}.a

[ | eval results=mvappend(results,"<<MATCHSTR>>" . ":" . '<<FIELD>>') ]

| mvexpand results

| eval resultsexpanded=split(results,":")

| eval resultid=mvindex(resultsexpanded,0),resultvalue=mvindex(resultsexpanded,1)

| table resultid,resultvalue

But as you can see, it's nowhere near pretty.


This is the output of the query:

Also, an example of my event:

browsers: { [+]

}

coverageResult: { [+]

}

libraryPath: libs/funnels

result: { [-]

82348856: [ [+]

]

}

summary: { [+]

}

}

If you are 100% sure that you only have one result, and always will have just one result, you could try to brute-force it and:

1) spath the whole result field

2) Extract the result's ID with regex

3) cut the id part from the result field

4) spath the remaining array

Might work, might not (especially since, if the rest of the data is similarly (dis)organized, you're gonna have more dynamically named fields deeper down). It definitely won't be pretty, as manipulating structured data with regexes is prone to errors.
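A rough, untested sketch of those four steps, assuming exactly one numeric ID under result. Steps 2 and 3 are folded into a single rex, and result_json, result_id, and result_array are field names made up for this sketch.

```spl
index="github_runners" sourcetype="testing" source="reports-tests"
| spath path=libraryPath output=library
| spath path=result output=result_json
| rex field=result_json "(?s)^\s*\{\s*\"(?<result_id>\d+)\"\s*:\s*(?<result_array>\[.*\])\s*\}\s*$"
| spath input=result_array path={} output=testResult
| mvexpand testResult
| spath input=testResult path=fullName output=test_name
| spath input=testResult path=success output=test_outcome
| table library result_id test_name test_outcome
```

If an event ever carries two IDs, the greedy \[.*\] would swallow everything between the first [ and the last ], so result_array would no longer be valid JSON. That is the reason for the "100% sure" condition above.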

BTW, I don't understand how you got so many results from my example.


Can you guess what I am going to say?

Please share some anonymised representative sample events so we can see what you are dealing with, preferably in a code block </> to prevent format information being lost


browsers: { [+]

}

coverageResult: { [+]

}

libraryPath: libs/funnels

result: { [-]

82348856: [ [+]

]

}

summary: { [+]

}

}

This is not valid JSON, it is a formatted version of the JSON. Perhaps I wasn't specific enough.

Please share some anonymised representative sample raw events so we can see what you are dealing with, preferably in a code block </> to prevent format information being lost.

For example, we would want to be able to use the events in a runanywhere / makeresults style search, much like @PickleRick demonstrated


it's too long to paste it here 😞


Try cutting it down so that it remains valid and representative and then paste it here.


this is an example to make results :

| makeresults format=json data="[{\"browsers\":{\"0123456\":{\"id\":\"0123456\",\"fullName\":\"blahblah\",\"name\":\"blahblah\",\"state\":0,\"lastResult\":{\"success\":1,\"failed\":2,\"skipped\":3,\"total\":4,\"totalTime\":5,\"netTime\":6,\"error\":true,\"disconnected\":true},\"launchId\":7}},\"result\":{\"0123456\":[{\"id\":8,\"description\":\"blahblah\",\"suite\":[\"blahblah\",\"blahblah\"],\"fullName\":\"blahblah\",\"success\":true,\"skipped\":true,\"time\":9,\"log\":[\"blahblah\",\"blahblah\"]}]},\"summary\":{\"success\":10,\"failed\":11,\"error\":true,\"disconnected\":true,\"exitCode\":12}}]"