Troubleshooting Splunk when the index queue fill ratio has reached 100%

Hi All,

We have a Monitoring Console, and following a recent release we observed that the aggregator queue, typing queue, and index queue fill ratios have all reached 100%. I checked the indexer performance dashboards in the Monitoring Console but could not find any relevant error that might have caused it. The data ingestion rate shown in the licensing console looks the same as on any other day. Can someone please point me to the right steps to troubleshoot this? Thanks.

Hi

You said “due to a recent release”. What does that refer to? A new Splunk version, a new software release of your business app, or something else?

r. Ismo

Hi @arjitg,

there are several factors to investigate that could cause this issue:

- slow storage (Splunk requires at least 800 IOPS): this is the most common cause,

- insufficient resources (CPUs) for the log volume you have to index: this is a frequent cause,

- too many regexes used in the typing queue: this shouldn't be the cause here, because your index queue also reached 100%.

Check your storage and CPU resources.

Ciao.

Giuseppe

Hi @gcusello ,

I checked the data ingested via licensing, so there isn't any additional influx of data into Splunk. Could insufficient resources (CPUs) for the log volume still be the issue, then? Also, you mentioned IOPS. There is no increase in data and it's not a new setup, so how could IOPS be impacted? Is there a way to check in more detail what could have impacted the index queue?

Thanks.

OK. You're _not_ waiting on the indexing queue, so it doesn't seem to be an issue of backpressure from the disk being unable to keep up with the rate of incoming events, or from any other configured outputs.

I'd start by checking the OS-level metrics (CPU usage, RAM). If _nothing_ else changed "outside" (the amount of events, their ingestion rate throughout the day and not only the summarized license consumption over the whole day, the composition of the ingest stream, i.e. its split among different sources, sourcetypes and so on), then something must have changed within your infrastructure. There are no miracles 😉

Is this a bare-metal installation or a VM? There could be issues with oversubscribed resources if it's a VM, or even with the ambient temperature in your DC causing your CPUs to be throttled (yes, I've seen such things).

But if the behaviour changed, something must have changed. Question is what.
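If it helps to look at the raw numbers instead of the dashboards, here is a minimal Python sketch that reads the `group=queue` lines from `metrics.log` and computes a fill percentage per queue. The sample lines are illustrative, not taken from this environment, and the field names (`max_size_kb`, `current_size_kb`, `blocked`) are assumed to match what your Splunk version writes, so check them against your own log first.

```python
import re
from collections import defaultdict

# Illustrative lines only (assumed metrics.log layout, not real data).
SAMPLE = """\
05-01-2024 10:00:00.000 +0000 INFO  Metrics - group=queue, name=typingqueue, blocked=true, max_size_kb=500, current_size_kb=499, current_size=1200, largest_size=1200, smallest_size=900
05-01-2024 10:00:00.000 +0000 INFO  Metrics - group=queue, name=indexqueue, max_size_kb=500, current_size_kb=0, current_size=0, largest_size=3, smallest_size=0
05-01-2024 10:00:30.000 +0000 INFO  Metrics - group=queue, name=typingqueue, max_size_kb=500, current_size_kb=250, current_size=600, largest_size=700, smallest_size=500
"""

# Matches key=value pairs separated by commas and/or whitespace.
KV = re.compile(r"(\w+)=([^,\s]+)")


def queue_fill(lines):
    """Summarise fill percentage and blocked count per queue name."""
    stats = defaultdict(lambda: {"samples": 0, "max_fill_pct": 0.0, "blocked": 0})
    for line in lines:
        if "group=queue" not in line:
            continue
        kv = dict(KV.findall(line))
        try:
            fill = 100.0 * float(kv["current_size_kb"]) / float(kv["max_size_kb"])
        except (KeyError, ValueError, ZeroDivisionError):
            continue  # skip lines that lack usable size fields
        entry = stats[kv.get("name", "unknown")]
        entry["samples"] += 1
        entry["max_fill_pct"] = max(entry["max_fill_pct"], fill)
        if kv.get("blocked") == "true":
            entry["blocked"] += 1
    return dict(stats)


if __name__ == "__main__":
    for name, entry in sorted(queue_fill(SAMPLE.splitlines()).items()):
        print(name, entry)
```

To run it against a real indexer you would pass the lines of its `metrics.log` instead of `SAMPLE`. Comparing the output for a day before and a day after the change should show which queue started to saturate first.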

Hi @arjitg,

in my opinion the issue is with the CPUs you are using:

How many CPUs does your indexer have?

How much log data are you ingesting?

What kind of storage are you using?

You can measure the IOPS of your storage using a tool such as Bonnie++, keeping in mind that Splunk requires at least 800 IOPS.

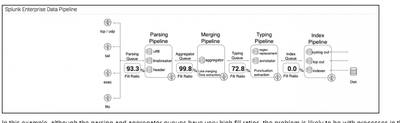

In addition, from your screenshot I see that your index queue is at 0%, so the issue is probably in the typing pipeline.
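The reasoning here is that a queue fills up when the stage reading from it is too slow, and the queues before it then fill up as backpressure. So the last saturated queue in pipeline order points at the slow stage. A rough Python sketch of that rule follows; the queue names are the ones normally seen in `metrics.log`, while the 90% threshold and the example values are my assumptions, not figures from your screenshot.

```python
# Ingestion queues in the order data flows through them on an indexer.
PIPELINE_ORDER = ["parsingqueue", "aggqueue", "typingqueue", "indexqueue"]


def locate_bottleneck(fill_pct, threshold=90.0):
    """Return the last queue at or above the threshold, or None if none is.

    fill_pct maps queue name to fill percentage. The threshold is an
    assumed cut-off for "saturated", not a Splunk-defined value.
    """
    saturated = [q for q in PIPELINE_ORDER if fill_pct.get(q, 0.0) >= threshold]
    return saturated[-1] if saturated else None


if __name__ == "__main__":
    # Hypothetical values: everything up to typing is full, index is empty.
    observed = {"parsingqueue": 100.0, "aggqueue": 100.0,
                "typingqueue": 100.0, "indexqueue": 0.0}
    print(locate_bottleneck(observed))
```

With the hypothetical values above the function returns `typingqueue`, meaning the stage that drains the typing queue (regex-based processing) is the one to look at. If `indexqueue` were returned instead, the suspects would be the disk or a configured output.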

Do you have many add-ons that parse your data?

Only if you have performant storage (at least 800 IOPS) can you use two parallel pipelines, which makes use of more resources. For more information, see https://docs.splunk.com/Documentation/Splunk/9.2.1/Indexer/Pipelinesets#Configure_the_number_of_pipe... .

Ciao.

Giuseppe