- Splunk Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- Chart Add fields sum of columns and columns larg...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am ruining a search to look for 7705 routers that has rebooted for loss of power.

this is working well, but I wish to add to it

"Old server 0.0.0.0" OR ("Jan 1") OR ("Dec 31")

| eval hourx=strftime(_time, "%m-%d")

| dedup CASCADE hourx | sort host| sort - host _time

| chart count by host hourx where top100

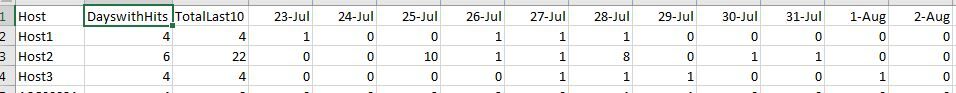

I'd Like to add two Columns to my search the first is a sum of the past days TotalLast10

the second is number of days I had a hit DayswithHits

I'd like it to look like this.

I have been playing with addtotals eventstat and so far haven't had any luck

not sure if this is possible with chart or if I'll need to scrap this approach

Thanks Graham

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey Shouldntdothat,

This was a tricky one!

To be completely honest I'm not sure how this will work with larger datasets, and I'm basing the whole search on what I think your original dataset might look like. That said, it is possible, and I want to show you one way of how it is possible!

Copy and paste this into any Splunk instance and you can dissect it to see how the search is working. I've left comments as kind of "checkpoints" to show you what each portion of the search is doing and how it is changing the data.

| gentimes start=7/23/2019 end=1 increment=1d

| rename starttime AS _time

| table _time

| eval values = (random()%19+1).",".(random()%19+1).",".(random()%19+1), Host = "Host1,Host2,Host3"

| eval values = split(values,","), Host = split(Host,",")

| eval newfield = mvzip(Host, values)

| mvexpand newfield

| fields _time newfield

| rex field=newfield "(?<Host>.*?)\,(?<Value>.*)"

| table _time Host Value

| eval randomizer = random()%100

| where randomizer > 55

| fields - randomizer

`comment("Above is just creating a dataset that mimics what I believe your data may look like")`

| timechart sum(Value) AS value_sum by Host

| fillnull value=0

`comment("Using timechart instead of chart so that all days are accounted for, including days with no data.")`

| sort _time

| table _time *

| transpose 0 header_field=_time

| where column != "_span" AND column != "_spandays"

| addtotals

`comment("Formatting data in the same chart format that you have in your spreadsheet and getting a total count for all values by host (See the "Total" field).

Note: The numbers you see as the field headers are epoch timestamps. They translate to July 23, July 24, etc. We will tranlate these numbers to human readable numbers later in the search.")`

| rename * as temp_*

`comment("The foreach command won't work if the first character of a field is a digit, renaming all fields with a "temp_" prefix")`

| foreach *

[| eval <<FIELD>>_unique = if("<<FIELD>>" = "temp_column", temp_column, if("<<FIELD>>" = "temp_Total", temp_Total, if(<<FIELD>> = 0, 0, 1)))]

| eval count = 0

`comment("Creating temporary "_unique" fields for all existing fields that are either a "1" or a "0" to determine if there was a hit that day. In this way, we can find unique daily hits, while preserving our original field values.")`

| foreach *_unique

[| eval count = if(<<FIELD>> = "1", count + 1, count)]

| fields - *_unique

`comment("Calculating a unique number of hits by row (See the "count" field), and then removing the temporary "_unique" fields.")`

| rename temp_* AS *

`comment("Removing the "temp_" prefix to return fields to their original names, since we're done using the foreach command")`

| transpose 0 header_field=column

| eval day = strftime(column, "%d-%b")

| eval day = if(isnull(day), column, day)

| eval column = if(isnum(column), column, null)

| eval column = if(isnull(column), now(), column)

| eval column = if(day = "count", "1", if(day = "Total", "2", column))

| sort column

| fields - column

| table day *

| transpose 0 header_field=day

| rename column AS Host, count AS DaysWithHits, Total AS TotalLast10

`comment("Converting epoch numbers to human readable time for column headers, and sorting headers so that "Hosts" will always come first, followed by "DaysWithHits","TotalLast10", and then reverse chronological order by date.")`

If there are problems, or there is anything you need clarified, let me know!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey Shouldntdothat,

This was a tricky one!

To be completely honest I'm not sure how this will work with larger datasets, and I'm basing the whole search on what I think your original dataset might look like. That said, it is possible, and I want to show you one way of how it is possible!

Copy and paste this into any Splunk instance and you can dissect it to see how the search is working. I've left comments as kind of "checkpoints" to show you what each portion of the search is doing and how it is changing the data.

| gentimes start=7/23/2019 end=1 increment=1d

| rename starttime AS _time

| table _time

| eval values = (random()%19+1).",".(random()%19+1).",".(random()%19+1), Host = "Host1,Host2,Host3"

| eval values = split(values,","), Host = split(Host,",")

| eval newfield = mvzip(Host, values)

| mvexpand newfield

| fields _time newfield

| rex field=newfield "(?<Host>.*?)\,(?<Value>.*)"

| table _time Host Value

| eval randomizer = random()%100

| where randomizer > 55

| fields - randomizer

`comment("Above is just creating a dataset that mimics what I believe your data may look like")`

| timechart sum(Value) AS value_sum by Host

| fillnull value=0

`comment("Using timechart instead of chart so that all days are accounted for, including days with no data.")`

| sort _time

| table _time *

| transpose 0 header_field=_time

| where column != "_span" AND column != "_spandays"

| addtotals

`comment("Formatting data in the same chart format that you have in your spreadsheet and getting a total count for all values by host (See the "Total" field).

Note: The numbers you see as the field headers are epoch timestamps. They translate to July 23, July 24, etc. We will tranlate these numbers to human readable numbers later in the search.")`

| rename * as temp_*

`comment("The foreach command won't work if the first character of a field is a digit, renaming all fields with a "temp_" prefix")`

| foreach *

[| eval <<FIELD>>_unique = if("<<FIELD>>" = "temp_column", temp_column, if("<<FIELD>>" = "temp_Total", temp_Total, if(<<FIELD>> = 0, 0, 1)))]

| eval count = 0

`comment("Creating temporary "_unique" fields for all existing fields that are either a "1" or a "0" to determine if there was a hit that day. In this way, we can find unique daily hits, while preserving our original field values.")`

| foreach *_unique

[| eval count = if(<<FIELD>> = "1", count + 1, count)]

| fields - *_unique

`comment("Calculating a unique number of hits by row (See the "count" field), and then removing the temporary "_unique" fields.")`

| rename temp_* AS *

`comment("Removing the "temp_" prefix to return fields to their original names, since we're done using the foreach command")`

| transpose 0 header_field=column

| eval day = strftime(column, "%d-%b")

| eval day = if(isnull(day), column, day)

| eval column = if(isnum(column), column, null)

| eval column = if(isnull(column), now(), column)

| eval column = if(day = "count", "1", if(day = "Total", "2", column))

| sort column

| fields - column

| table day *

| transpose 0 header_field=day

| rename column AS Host, count AS DaysWithHits, Total AS TotalLast10

`comment("Converting epoch numbers to human readable time for column headers, and sorting headers so that "Hosts" will always come first, followed by "DaysWithHits","TotalLast10", and then reverse chronological order by date.")`

If there are problems, or there is anything you need clarified, let me know!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you exactly what I needed.

the comments especially were very helpful