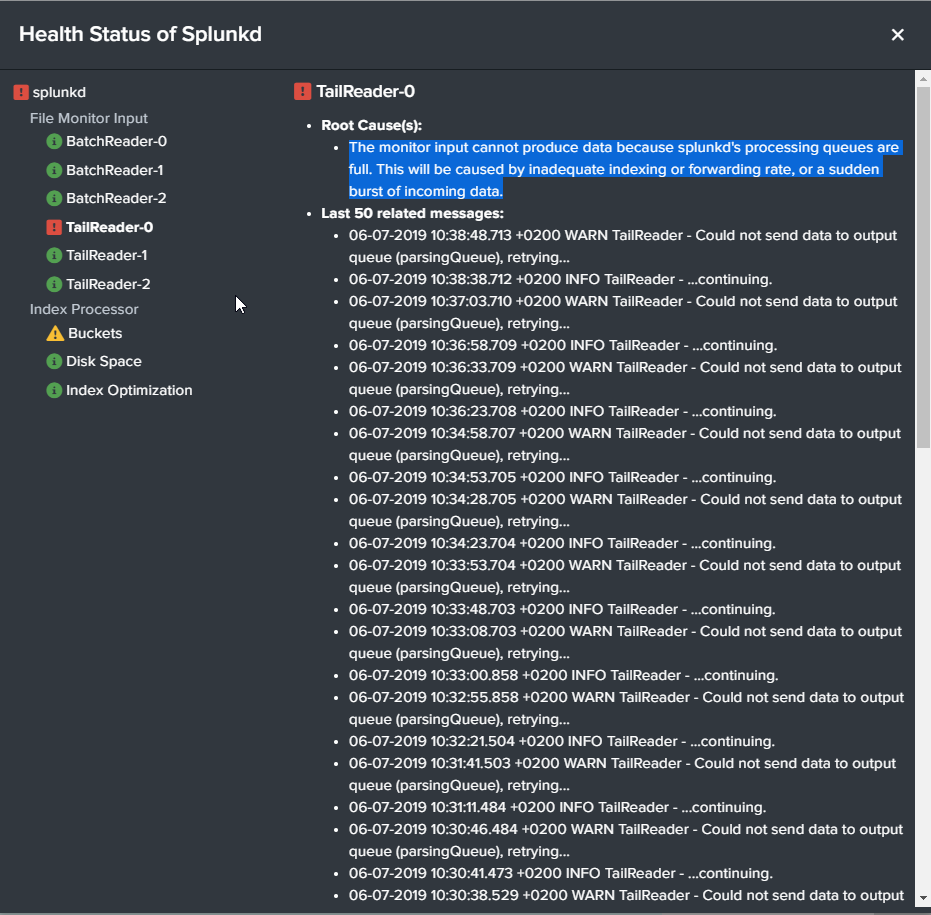

How to solve TailReader and buckets errors

Hi, we have upgraded to 7.2.6 and we are now getting errors all the time. Today we have used only 3 GB of our license, so not much; however, I am seeing the issues below (images).

I have 3 questions:

1) We have a single install (1 search head and 1 indexer) and are thinking of moving to 5 indexers and 1 search head. Will this help with this issue?

2) What happens when the tail reader is full? Who suffers, what are the impacts, and how can I help this?

3) What happens when buckets are full? Who suffers, what are the impacts, and how can I help this?

Thanks in advance

Rob

I have just adjusted the maxQueueSize parameter in the outputs.conf file on the heavy forwarder to a greater value; the default is 500KB. That has solved the issue.
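For anyone wanting to try the same change, here is a minimal sketch of what it might look like in outputs.conf on the heavy forwarder. The 10MB value is only an illustrative assumption, not a figure from this thread; size it to your own data volume and available memory.

```ini
# outputs.conf on the heavy forwarder
[tcpout]
# Maximum size of the forwarder's output queue.
# 10MB is an assumed example value; pick one that fits your environment.
maxQueueSize = 10MB
```

A restart of the forwarder is typically needed for the change to take effect. The setting can also be placed in a specific `[tcpout:<group>]` stanza if only one output group should be affected.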

Hi,

the first questions that come to my mind are the following:

- From which version did you upgrade to 7.2.6?

- Which Splunk instances are reporting these errors? Only Universal Forwarders? All of them? If not, which versions are affected?

1) We have a single install (1 search head and 1 indexer) and thinking of moving to 5 indexers and 1 search head, will this help this issue?

How many GB per day are you ingesting? Why do you want to move to 5 indexers right away? Do you have any experience in building indexer clusters?

Moving to 5 indexers would not be advisable without further information. Under optimal conditions, one indexer should be able to index 100+ GB per day, depending on factors such as the number of scheduled and ad-hoc searches being run by users.

2) What happens when the tail reader is full, who suffers what are the impacts, how can I help this?

You are experiencing issues that might be due to timestamping problems. Make sure the data being indexed has the correct timestamp settings applied and that _time is extracted correctly. This thread on answers is also a good starting point for troubleshooting.
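As a rough illustration of explicit timestamp settings, here is a minimal props.conf sketch. The sourcetype names `my_app_log` and `gc_log` and the timestamp layout are hypothetical assumptions; adjust them to the actual data.

```ini
# props.conf on the instance that parses the data (indexer or heavy forwarder)

# Hypothetical sourcetype whose events start with e.g. 2019-05-01 12:34:56
[my_app_log]
# The timestamp sits at the very start of each event
TIME_PREFIX = ^
# strptime-style format of the timestamp
TIME_FORMAT = %Y-%m-%d %H:%M:%S
# Only inspect the first 19 characters after TIME_PREFIX
MAX_TIMESTAMP_LOOKAHEAD = 19

# Hypothetical sourcetype for data that carries no timestamp at all
[gc_log]
# Assign the current system time at indexing instead of searching for a timestamp
DATETIME_CONFIG = CURRENT
```

Pinning the format this way keeps Splunk from guessing at timestamps, and `DATETIME_CONFIG = CURRENT` is one option for sources that have no timestamp to extract.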

3) What happens when buckets are full, who suffers what are the impacts, how can I help this?

Creating a high number of buckets should be avoided. Because of how Splunk works, performance suffers when many buckets have to be opened and kept open (for example, due to the timestamping problems mentioned above).

Skalli

Hi

Thanks for the answer.

To answer some questions:

From which version did you upgrade to 7.2.6?

[RL] We came from 7.1.6.

How many GB per day are you ingesting? Why do you want to move to 5 indexers right away? Do you have any experience in building indexer clusters?

[RL] We ingest 40 GB per day on a busy day, but today, when I had the issue, we had ingested only 3 GB.

We are thinking of increasing our number of indexers anyway due to other issues, and I wanted to know if it would help here. I don't mind how many I go to; I just want to know whether that will help this issue. I have some experience in setting it up.

Some of our data does not have timestamps, and I am not sure if I can do anything about that, as I need the data. I know the specific source type: it is GC data with no timestamp.

Thanks in advance for any advice

Rob

Hi @robertlynch2020 , did you find any solution to this issue?

Hello,

I have the same issue since I started monitoring my log files on another Splunk server.

Did you manage to solve your problem?

BR,

Fatma.