
Why is field ingestion not working when ingesting custom CSV data?

Hello,

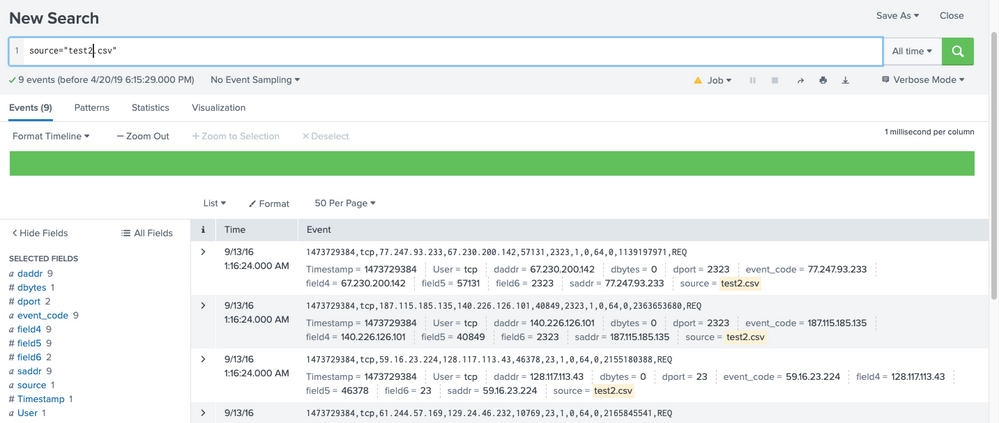

I am trying to ingest some custom CSV data that I have created. For some of the data, Splunk extracts the correct fields but also shows duplicate fields named "field 1", "field 2", and so on.

Any ideas on how to get the data to ingest correctly? A screenshot is attached.

I have tried creating field aliases and rules during ingestion, but without any luck.

Thank you in advance for any help!


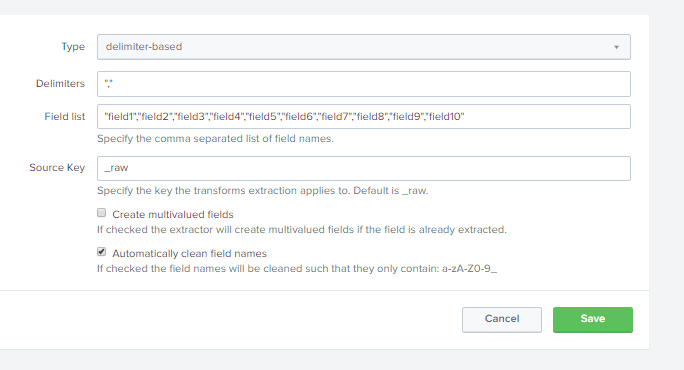

It looks like you set up a field extraction without editing the field names and then saved it.

To remove them, you will need to remove the field extraction. Go to Settings->Fields->Field Extractions. Make sure you have the right app selected. Filter by the name of your sourcetype. If you don't know that, sort by owner and look for your username. Delete the entry that you did not mean to create.

If you see multiple entries and are unsure which one is yours go to Settings->Fields->Field Transoformations. Sort by owner and click into the ones owned by your username. Look for the one that has a field list similar to the one below:

Take note of the name on that screen at the upper left hand corner. Go back to Settings->Fields->Field Extractions. Find the extraction that has your name as owner with that transformation name in its name. Delete it.
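For reference, a delimiter-based extraction saved without renaming its fields usually ends up as a field transformation plus a `REPORT-` field extraction that points at it. The sketch below shows roughly what that pair looks like in transforms.conf and props.conf; the stanza and sourcetype names (`my_custom_csv_delims`, `my_custom_csv`) and the number of fields are hypothetical, not taken from this thread.

```ini
# transforms.conf: delimiter-based field transformation saved
# with the default field names (hypothetical stanza name)
[my_custom_csv_delims]
DELIMS = ","
FIELDS = field1, field2, field3, field4, field5, field6

# props.conf: the field extraction that applies the transformation
# to the sourcetype (hypothetical sourcetype name)
[my_custom_csv]
REPORT-my_custom_csv_delims = my_custom_csv_delims
```

Deleting the field extraction in the UI removes the `REPORT-` reference, so the generic `fieldN` names stop appearing at search time.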


@grantccarlson, you would need to share the props.conf and transforms.conf settings that apply to this sourcetype. Would it be possible for you to do so?

If possible, also share a sample data file with the CSV header row and at least one data row. Please mock or anonymize any sensitive information before sharing data or code.

`| makeresults | eval message= "Happy Splunking!!!"`


Hello, I'm not sure where to find the props.conf and transforms.conf files. I will attach a screenshot to show the data file.


```
Timestamp proto saddr daddr sport dport spkts dpkts sbytes dbytes stcpb State
1473729384 tcp 94.255.165.163 140.226.22.228 23237 23 1 0 64 0 2363627212 REQ
1473729384 tcp 36.69.63.66 164.47.8.18 43092 2323 1 0 64 0 2754545844 REQ
1473729384 tcp 41.174.140.215 161.98.74.245 48716 2323 1 0 64 0 2707573431 REQ
```
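If a search-time extraction is still wanted for data that is already indexed, one option is a delimiter transformation whose `FIELDS` list uses the header names above instead of the default `fieldN` names. This is a minimal sketch, assuming the file is comma-separated (the pasted sample shows spaces, possibly because the commas were lost) and using hypothetical stanza and sourcetype names.

```ini
# transforms.conf (hypothetical stanza name; assumes comma-separated columns)
[my_custom_csv_fields]
DELIMS = ","
FIELDS = Timestamp, proto, saddr, daddr, sport, dport, spkts, dpkts, sbytes, dbytes, stcpb, State

# props.conf (hypothetical sourcetype name)
[my_custom_csv]
REPORT-my_custom_csv_fields = my_custom_csv_fields
```

Note that with a purely search-time approach the header line itself is indexed as an event and gets the same extraction applied to it.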


Your screenshot and your description do not match. There are no duplicated fields shown.


- "Field 6" is the same as "dport"
- "field 4" is the same as "daddr"
- "event_code" is the same as "saddr"

In each case, the same values appear under two different names. Sorry, "duplicate fields" was probably not the best wording.


What is the first line of the CSV file, and how are you ingesting it? Are you using INDEXED_EXTRACTIONS=csv?
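For comparison, a props.conf stanza that uses `INDEXED_EXTRACTIONS = csv` makes Splunk take the field names from the header row at ingest time, so no generic `fieldN` names are created. This is a minimal sketch, assuming a comma-delimited file whose first line is the header and whose `Timestamp` column holds epoch seconds, as in the sample posted in this thread; the sourcetype name is hypothetical. If a universal forwarder reads the file, this stanza has to be deployed on the forwarder, while `KV_MODE` belongs on the search head.

```ini
# props.conf (sketch; "my_custom_csv" is a hypothetical sourcetype name)
[my_custom_csv]
# Parse the file as structured CSV when it is ingested
INDEXED_EXTRACTIONS = csv
# The header row with the field names is the first line of the file
HEADER_FIELD_LINE_NUMBER = 1
FIELD_DELIMITER = ,
# Take the event time from the Timestamp column (epoch seconds)
TIMESTAMP_FIELDS = Timestamp
TIME_FORMAT = %s
# Fields are already extracted at index time, so skip automatic
# search-time key/value extraction to avoid extracting them twice
KV_MODE = none
```

Adjust `FIELD_DELIMITER` if the file does not actually use commas.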


The first line of the CSV file contains the CIM field names that correspond to the data. I don't think I am using INDEXED_EXTRACTIONS=csv.


What are the props.conf and transforms.conf settings for the sourcetype?

If this reply helps you, Karma would be appreciated.


I'm not sure. How do you check?


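You can read the .conf files directly or use btool, for example `$SPLUNK_HOME/bin/splunk btool props list <your_sourcetype> --debug` (and the same with `transforms`), where `--debug` also shows which file each setting comes from. Since you asked, however, I'll assume you did not change them.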

How did you ingest the CSV file? Did you use the Add Data wizard?
