Why alert is not triggering on exact time

Hi,

For the past week I have been looking into my alert jobs. I found that alerts are triggering 4 minutes before the scheduled trigger time. Because of this time difference, I missed a lot of alerts. May I know why Splunk Enterprise is not triggering at the scheduled time?

I am attaching screenshots for clarity.

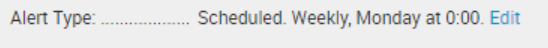

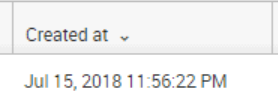

The first image above shows my alert's scheduled trigger time.

The second image shows the time the alert actually triggered. Based on the trigger condition, it should have triggered today at 12:00 AM, but it triggered yesterday at 11:56 PM.

Please explain if anyone knows the reason behind this issue.

Thanks in Advance,

Chandana

I believe you are misinterpreting that time stamp. Just because the search was "created" shortly before the scheduled time does not mean that it ran early.

If you add this code to the end of the alert, you will see the actual time range that is covered.

    | append [| makeresults | addinfo
        | eval Time = strftime(_time,"%Y-%m-%d %H:%M:%S")
        | eval Info_min_time=strftime(info_min_time,"%Y-%m-%d %H:%M:%S")
        | eval Info_max_time=strftime(info_max_time,"%Y-%m-%d %H:%M:%S")
        | eval Info_search_time=strftime(info_search_time,"%Y-%m-%d %H:%M:%S")
        | eval Now=strftime(now(),"%Y-%m-%d %H:%M:%S")
        | table Time Now Info_min_time Info_max_time Info_search_time
    ]

info_min_time and info_max_time are the time bounds for events selected by the search. Info_search_time is the time the search was created. Now is the time the search started. Time is the time the makeresults command generated its output event, which is roughly a second after now().
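To compare the scheduled time with the time the job was actually dispatched, the scheduler's own log can also be checked. This is only a sketch: it assumes you can search the _internal index, and "My Weekly Alert" is a placeholder for the real alert name.

```spl
index=_internal sourcetype=scheduler savedsearch_name="My Weekly Alert"
| eval Scheduled=strftime(scheduled_time,"%Y-%m-%d %H:%M:%S")
| eval Dispatched=strftime(dispatch_time,"%Y-%m-%d %H:%M:%S")
| eval Delay_seconds=dispatch_time-scheduled_time
| table _time Scheduled Dispatched Delay_seconds status
```

If Scheduled shows 12:00 AM and Delay_seconds is small, the alert ran on schedule and the earlier timestamp in the job list is something else.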

More likely, the problem is that events can take a few seconds (or more) to be indexed. That is why the normal practice is to schedule such a job to run a few minutes after the hour, rather than exactly on the hour.
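One way to apply that advice without changing the reporting window is to pin the search's time range to midnight with snap-to time modifiers and move only the schedule. A minimal sketch, assuming a hypothetical index named my_index and an alert rescheduled to run a few minutes after midnight on Monday:

```spl
index=my_index earliest=-7d@d latest=@d
| stats count
```

Because earliest and latest both snap to the start of the day, the search covers the same seven full days whether it starts at 12:00 AM or 12:05 AM, and the later start gives late-arriving events time to be indexed.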

Thanks for your response. My alerts are triggering before the hour, not after the hour. I'll use your query to verify the selected time range.

Thanks,

Chandana

We need more information.

What's the alert condition?

How are you saving that file?

Hi Chandana,

Are there any other trigger conditions configured?

No, it triggers based on the number of results.

It's a scheduled alert. It triggers every Monday at 12:00 AM. The output file is saved as a PDF.

I have been using this alert for the past month and a half. It used to trigger at exactly the specified time, but since last week it has been triggering as described above.