Transaction by field not grouping correctly when a...

Hi there,

I have an index storing information about network connections, which receives a record for each connection every five minutes. Each event has an identifier (id) that states which connection the event belongs to. I need to group the events by their id so I can compute traffic differences and other statistics per connection.

When I run the search for a single device (filtering on src before the transaction command), all connections for that device are extracted correctly. This is the search:

index=xxx event_type=detailed_connections earliest=11/24/2017:13:00:0 latest=11/24/2017:15:00:0 src=/P1zWkJszeaoJTZBVDI8ow

| transaction id mvlist=true keepevicted=true maxspan=-1 maxpause=-1 maxevents=-1

| table src id bytes_in eventcount closed_txn

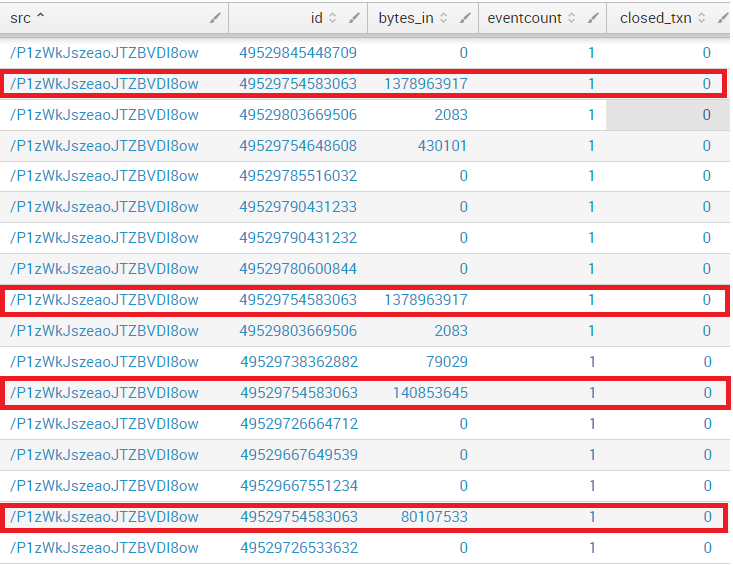

And these are the results. Let's focus, for instance, on the connection with id = 49529754583063. The transaction is composed of 13 events with increasing traffic (bytes_in). This is perfectly fine.

However, when running the same command BUT WITHOUT SPECIFYING ANY DEVICE (no src filtering before the transaction command),

index=nexthink event_type=detailed_connections earliest=11/24/2017:13:00:0 latest=11/24/2017:15:00:0

| transaction id mvlist=true keepevicted=true maxspan=-1 maxpause=-1 maxevents=-1

| table src id bytes_in eventcount closed_txn

I noticed that some events are not grouped as they should be.

Focusing on the same connection as before, I can see several separate transactions with the same id, each with eventcount equal to 1 (only some of them are shown, not all).

As a result, I cannot compute trustworthy stats for all devices, and running the same command over and over again device by device is not acceptable.

As you can see, I've removed the limits on maxspan, maxevents and maxpause, and I'm keeping the evicted transactions. What am I doing wrong? Is this actually a bug (I don't think so)? How can I get transactions grouped properly when working with thousands of events?

Thanks in advance for the support!

Regards,

Leo


@leosanchezcasado, you should refer to the following documentation to choose the correct correlation method for your scenario (PS: it is not always applicable, and there is no hard and fast rule here): http://docs.splunk.com/Documentation/Splunk/latest/Search/Abouteventcorrelation

In your case you should use the stats command, which performs better than transaction and will not lose events. Here is a stats equivalent of the transaction search you are having issues with:

index=nexthink event_type=detailed_connections earliest=11/24/2017:13:00:0 latest=11/24/2017:15:00:0

| stats count as eventcount sum(bytes_in) earliest(_time) as earliestTime latest(_time) as latestTime by src id

| eval duration=latestTime-earliestTime

| fieldformat earliestTime=strftime(earliestTime,"%Y/%m/%d %H:%M:%S")

| fieldformat latestTime=strftime(latestTime,"%Y/%m/%d %H:%M:%S")

PS: The search is untested, so do let me know if anything in it is broken.
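To make the difference from transaction concrete, here is a rough Python model of what the stats search above computes per (src, id) pair. It is a sketch, not Splunk's implementation: events are assumed to be dicts with hypothetical keys src, id, _time (epoch seconds) and bytes_in, and the function name stats_by_src_id is made up for illustration.

```python
from collections import defaultdict


def stats_by_src_id(events):
    """Approximate the answer's search:

    stats count as eventcount sum(bytes_in) earliest(_time) as earliestTime
          latest(_time) as latestTime by src id
    | eval duration=latestTime-earliestTime
    """
    groups = defaultdict(list)
    for event in events:
        # Each event lands in exactly one (src, id) bucket; nothing is evicted.
        groups[(event["src"], event["id"])].append(event)

    rows = []
    for (src, conn_id), evs in groups.items():
        times = [e["_time"] for e in evs]
        earliest, latest = min(times), max(times)
        rows.append({
            "src": src,
            "id": conn_id,
            "eventcount": len(evs),
            "sum(bytes_in)": sum(e["bytes_in"] for e in evs),
            "earliestTime": earliest,
            "latestTime": latest,
            "duration": latest - earliest,
        })
    return rows


# Hypothetical sample events (epoch seconds), not data from the thread.
sample = [
    {"src": "A", "id": "1", "_time": 0, "bytes_in": 10},
    {"src": "A", "id": "1", "_time": 300, "bytes_in": 25},
    {"src": "B", "id": "2", "_time": 300, "bytes_in": 7},
]
for row in stats_by_src_id(sample):
    print(row)
```

The point of the model is the grouping step: every event is added to its bucket no matter how many buckets exist, so the result does not depend on how many connections are active at once.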

If you do not want to sum the bytes of the correlated events, you can use list(bytes_in) or values(bytes_in) instead. values() keeps only the unique values as a multivalue field, while list() keeps all values as a multivalue field but is limited to the first 100 values.
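One more detail from the Splunk stats function reference: values() returns its distinct values in lexicographic order, while list() keeps the order in which events arrive. The Python sketch below approximates both behaviors; splunk_values and splunk_list are hypothetical helper names, and Python's string sort is only an approximation of Splunk's ordering.

```python
def splunk_values(xs):
    """Model of stats values(X): distinct values, sorted lexicographically as strings."""
    return sorted({str(x) for x in xs})


def splunk_list(xs, limit=100):
    """Model of stats list(X): every value in arrival order, capped at `limit`."""
    return [str(x) for x in xs][:limit]


bytes_in = [900, 1200, 900, 15000]  # hypothetical values for one connection
print(splunk_values(bytes_in))  # ['1200', '15000', '900']
print(splunk_list(bytes_in))    # ['900', '1200', '900', '15000']
```

Note how "900" sorts after "15000": lexicographic order is not numeric order, which matters if the multivalue field is later used for arithmetic.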

| makeresults | eval message= "Happy Splunking!!!"


Hi @niketnilay,

Thanks so much for the information!

I've now been able to compute what I wanted using the grouping you suggested. I had to tune it a little, as I didn't want to sum up all bytes_in values but rather compute the difference between bytes_in in the latest and earliest event of each connection. Using values(), I was able to convert the bytes into a multivalue field and compute the differences. Thanks so much again!
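The exact search used here isn't shown, so this is only a hedged caution: because values() dedupes and sorts lexicographically, taking its first and last elements is not guaranteed to give the earliest and latest bytes_in once the counter crosses a digit boundary. The Python sketch below contrasts selecting by event time with selecting by values() order; bytes_delta and delta_from_values_order are hypothetical names, and the event fields are assumed. In SPL, the stats functions earliest(bytes_in) and latest(bytes_in) select by event time and would avoid the multivalue step (not tested against this data).

```python
def bytes_delta(events):
    """bytes_in of the latest event minus bytes_in of the earliest event, per id."""
    by_id = {}
    for e in events:
        by_id.setdefault(e["id"], []).append(e)
    deltas = {}
    for conn_id, evs in by_id.items():
        first = min(evs, key=lambda e: e["_time"])  # chronologically earliest
        last = max(evs, key=lambda e: e["_time"])   # chronologically latest
        deltas[conn_id] = last["bytes_in"] - first["bytes_in"]
    return deltas


def delta_from_values_order(bytes_values):
    """Pitfall: last minus first element of a values()-style (lexicographic) list."""
    vals = sorted({str(b) for b in bytes_values})
    return int(vals[-1]) - int(vals[0])


# Hypothetical connection whose counter grows from 3 to 5 digits.
events = [
    {"id": "c1", "_time": 0, "bytes_in": 900},
    {"id": "c1", "_time": 300, "bytes_in": 1200},
    {"id": "c1", "_time": 600, "bytes_in": 15000},
]
print(bytes_delta(events))  # {'c1': 14100}
print(delta_from_values_order([e["bytes_in"] for e in events]))  # -300
```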

However, I still don't know WHY the transaction command loses events when the amount of data is large. That's why I'm not accepting your answer yet (although it is a perfect workaround for my situation).
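The thread never confirms the cause, but one plausible mechanism, offered as a hedged illustration rather than a diagnosis: the transaction command documents memory-control options, maxopentxn and maxopenevents (defaults read from the [transactions] stanza of limits.conf), and evicts open transactions on a least-recently-used basis once those limits are hit. With keepevicted=true, evicted fragments are still returned with closed_txn=0. Since no maxspan, maxpause or maxevents limit closes anything in the search above, every connection in the time range stays open at once. The Python model below is a simplified stand-in, not Splunk's implementation; group_with_lru_pool and max_open are hypothetical names, with max_open loosely playing the role of maxopentxn.

```python
from collections import OrderedDict


def group_with_lru_pool(events, max_open):
    """Group events by id while keeping at most `max_open` open groups.

    When a new id arrives and the pool is full, the least recently
    updated group is evicted and still emitted (like keepevicted=true);
    a later event with the same id then starts a new, separate group.
    """
    pool = OrderedDict()  # id -> list of events, ordered by last update
    emitted = []
    for e in events:
        key = e["id"]
        if key in pool:
            pool[key].append(e)
            pool.move_to_end(key)  # mark as most recently used
        else:
            if len(pool) >= max_open:
                _, evicted = pool.popitem(last=False)  # least recently used
                emitted.append(evicted)
            pool[key] = [e]
    emitted.extend(pool.values())  # flush groups still open at the end
    return emitted


# Hypothetical: three connections each reporting four times, interleaved.
events = [{"id": conn, "round": r} for r in range(4) for conn in ("a", "b", "c")]

roomy = group_with_lru_pool(events, max_open=10)
tight = group_with_lru_pool(events, max_open=2)
print([len(g) for g in roomy])  # [4, 4, 4]
print([len(g) for g in tight])  # twelve groups of one event each
```

When connections report round-robin and the pool is smaller than the number of active connections, each group is evicted before its next event arrives, so every transaction ends up with a single event. That pattern resembles the eventcount=1 fragments described in the question.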

If nobody provides a proper explanation in a couple of days, I will accept yours 🙂

Regards,

Leo


@leosanchezcasado, please refer to the documentation on when to use stats instead of transaction:

http://docs.splunk.com/Documentation/Splunk/latest/Search/Abouttransactions#Using_stats_instead_of_t...

http://docs.splunk.com/Documentation/Splunk/latest/Search/Abouteventcorrelation


Hi @niketnilay,

Thanks so much for the info. I'm going to accept your answer, since no one else has provided more information and with the stats command I'm able to achieve what I wanted.

However, I still don't know whether the "wrong" extraction of transactions in my environment is a bug in Splunk or something I did wrong in the search...

Anyway, thanks so much for the help and support! Highly appreciated :).

Best regards,

Leo