
Splunk Add-on for Amazon Web Services: Why are ingested JSON event fields not extracted when using a custom sourcetype for a Kinesis stream?

Objective

Use the Splunk Add-on for Amazon Web Services to ingest events from an AWS Kinesis stream with a custom sourcetype.

Issue

Fields in the ingested JSON events are not extracted when I use a custom sourcetype.

What I have tried

I have created two Kinesis inputs that read from the same stream: one with sourcetype = aws:kinesis (as specified in the documentation at http://docs.splunk.com/Documentation/AddOns/released/AWS/Kinesis) and one with a custom sourcetype.

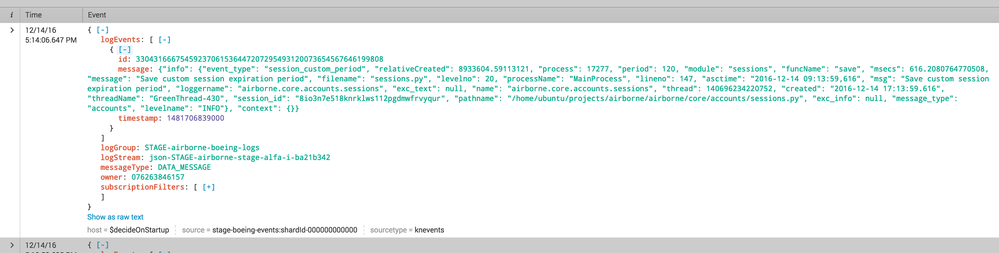

The events with the custom sourcetype do not have extracted JSON fields (see the attached picture).

The events with the standard sourcetype do have extracted JSON fields.

I have tested the custom sourcetype by using oneshot to load JSON data into a test index, and the fields were extracted correctly.

Create indices

    /opt/splunk/bin# ./splunk add index fromkinesis
    /opt/splunk/bin# ./splunk add index bythebookkn
    /opt/splunk/bin# ./splunk add index oneshottest

Test sourcetype with oneshot

    /opt/splunk/bin# ./splunk add oneshot /opt/splunk/data/test.json -sourcetype myevents -index oneshottest

Kinesis inputs

    /opt/splunk/etc/apps/Splunk_TA_aws/local# cat aws_kinesis_tasks.conf
    [bythebookkn]
    account = splunk
    encoding =
    format = CloudWatchLogs
    index = bythebookkn
    init_stream_position = LATEST
    region = us-east-1
    sourcetype = aws:kinesis
    stream_names = stage-my-events

    [fromkinesis]
    account = splunk
    encoding =
    format = CloudWatchLogs
    index = fromkinesis
    init_stream_position = LATEST
    region = us-east-1
    sourcetype = myevents
    stream_names = stage-my-events
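Both inputs read the same stream, so one quick sanity check is to confirm that the two stanzas differ only in index and sourcetype. A minimal sketch using Python's standard configparser, assuming the .conf file parses as INI (close to, but not exactly, Splunk's .conf syntax); diff_stanzas is a hypothetical helper, not part of Splunk or the add-on:

```python
import configparser

# aws_kinesis_tasks.conf as posted above (assumed to be valid INI syntax).
CONF_TEXT = """
[bythebookkn]
account = splunk
encoding =
format = CloudWatchLogs
index = bythebookkn
init_stream_position = LATEST
region = us-east-1
sourcetype = aws:kinesis
stream_names = stage-my-events

[fromkinesis]
account = splunk
encoding =
format = CloudWatchLogs
index = fromkinesis
init_stream_position = LATEST
region = us-east-1
sourcetype = myevents
stream_names = stage-my-events
"""


def diff_stanzas(conf_text, a, b):
    """Hypothetical helper: return {key: (value_in_a, value_in_b)} for differing keys."""
    # interpolation=None so any '%' in values is taken literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    keys = set(parser[a]) | set(parser[b])
    return {
        key: (parser[a].get(key), parser[b].get(key))
        for key in sorted(keys)
        if parser[a].get(key) != parser[b].get(key)
    }


print(diff_stanzas(CONF_TEXT, "bythebookkn", "fromkinesis"))
# {'index': ('bythebookkn', 'fromkinesis'), 'sourcetype': ('aws:kinesis', 'myevents')}
```

If only index and sourcetype differ, the difference in field extraction has to come from how the two sourcetypes are configured, not from the Kinesis input settings.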

Sourcetype

    /opt/splunk/etc/system/local# cat props.conf
    TRUNCATE = 800000

    [myevents]
    INDEXED_EXTRACTIONS = json
    TIMESTAMP_FIELDS = info.created
    TIME_FORMAT = %Y-%d-%m %H:%M:%S.%3Q
    TZ = UTC
    detect_trailing_nulls = auto
    SHOULD_LINEMERGE = false
    KV_MODE = none
    AUTO_KV_JSON = false
    category = Custom
    disabled = false
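The TIME_FORMAT above is worth checking against the sample event posted later in this thread, whose info.created value is 2016-12-14 17:13:59.616 (year, month, day). A minimal sketch using Python's datetime.strptime as a stand-in for Splunk's timestamp parser; %f approximates Splunk's %3Q, and treating the two parsers as equivalent is an assumption:

```python
from datetime import datetime

# info.created value taken from the sample event in this thread.
SAMPLE_CREATED = "2016-12-14 17:13:59.616"

# Python approximations of the Splunk TIME_FORMAT; %f stands in for %3Q.
CANDIDATES = {
    "as configured (%Y-%d-%m)": "%Y-%d-%m %H:%M:%S.%f",
    "year-month-day (%Y-%m-%d)": "%Y-%m-%d %H:%M:%S.%f",
}


def try_formats(value, candidates):
    """Return {label: parsed datetime, or None if the format does not match}."""
    results = {}
    for label, fmt in candidates.items():
        try:
            results[label] = datetime.strptime(value, fmt)
        except ValueError:
            results[label] = None
    return results


for label, parsed in try_formats(SAMPLE_CREATED, CANDIDATES).items():
    print(f"{label}: {parsed}")
# as configured (%Y-%d-%m): None
# year-month-day (%Y-%m-%d): 2016-12-14 17:13:59.616000
```

If that sample is representative, %Y-%d-%m would not match it. A timestamp mismatch is likely to affect event time rather than whether fields are extracted, so this is a side issue to the question itself.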


I think you need to change format = CloudWatchLogs, because that setting strips the JSON wrapper. Set it to "none" and try again.
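Roughly what "stripping the wrapper" involves, as a sketch rather than the add-on's actual code: CloudWatch Logs delivers subscription data to Kinesis as gzip-compressed JSON envelopes, each carrying a logEvents array. This assumes the record payload has already been base64-decoded by the Kinesis client; unwrap_cloudwatch_record is a hypothetical helper:

```python
import gzip
import json


def unwrap_cloudwatch_record(data):
    """Hypothetical helper: turn one CloudWatch Logs subscription payload
    (gzip-compressed JSON bytes) into the list of raw log message strings."""
    envelope = json.loads(gzip.decompress(data))
    # CloudWatch Logs also sends CONTROL_MESSAGE records to check the destination.
    if envelope.get("messageType") != "DATA_MESSAGE":
        return []
    return [event["message"] for event in envelope.get("logEvents", [])]


# Build a payload shaped like the sample in this thread (values shortened).
inner = {"info": {"event_type": "session_custom_period",
                  "created": "2016-12-14 17:13:59.616"}, "context": {}}
envelope = {
    "messageType": "DATA_MESSAGE",
    "logGroup": "STAGE-airborne-boeing-logs",
    "logEvents": [{"id": "1", "timestamp": 1481706839000,
                   "message": json.dumps(inner)}],
}
record_data = gzip.compress(json.dumps(envelope).encode("utf-8"))

messages = unwrap_cloudwatch_record(record_data)
print(type(messages[0]).__name__)  # str
```

Each unwrapped message is a plain string. If the application logged JSON, that string is itself JSON that still has to be parsed by whatever sourcetype configuration receives it.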


@mreynov_splunk This change does not achieve my objective.

The result is that I get a well-extracted JSON document that is the Kinesis event. Meanwhile, my log messages (which are also JSON) end up as a text field called "message" inside the Kinesis JSON and are not parsed as JSON at all.

    {
        "logGroup":"STAGE-airborne-boeing-logs",
        "owner":"076263846157",
        "logStream":"json-STAGE-airborne-stage-alfa-i-ba21b342",
        "subscriptionFilters":[
            "stage-airborne-boeing"
        ],
        "messageType":"DATA_MESSAGE",
        "logEvents":[
            {
                "id":"33043166675459237061536447207295493120073654567646199808",
                "message":"{\"info\": {\"event_type\": \"session_custom_period\", \"relativeCreated\": 8933604.59113121, \"process\": 17277, \"period\": 120, \"module\": \"sessions\", \"funcName\": \"save\", \"msecs\": 616.2080764770508, \"message\": \"Save custom session expiration period\", \"filename\": \"sessions.py\", \"levelno\": 20, \"processName\": \"MainProcess\", \"lineno\": 147, \"asctime\": \"2016-12-14 09:13:59,616\", \"msg\": \"Save custom session expiration period\", \"loggername\": \"airborne.core.accounts.sessions\", \"exc_text\": null, \"name\": \"airborne.core.accounts.sessions\", \"thread\": 140696234220752, \"created\": \"2016-12-14 17:13:59.616\", \"threadName\": \"GreenThread-430\", \"session_id\": \"8io3n7e518knrklws112pgdmwfrvyqur\", \"pathname\": \"/home/ubuntu/projects/airborne/airborne/core/accounts/sessions.py\", \"exc_info\": null, \"message_type\": \"accounts\", \"levelname\": \"INFO\"}, \"context\": {}}",
                "timestamp":1481706839000
            }
        ]
    }
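The sample shows why the fields stay hidden: the envelope is valid JSON, but logEvents[].message is a JSON-encoded string, so a single JSON parse yields one text field. A minimal sketch of the two-step parse in plain Python (not how Splunk does it internally), flattening nested keys into dotted names such as info.created, the name used in TIMESTAMP_FIELDS in the props.conf above; flatten is a hypothetical helper:

```python
import json

# Envelope shortened from the sample above; "message" is a JSON *string*.
RAW = r'''{"messageType": "DATA_MESSAGE",
 "logEvents": [{"id": "1", "timestamp": 1481706839000,
   "message": "{\"info\": {\"event_type\": \"session_custom_period\", \"created\": \"2016-12-14 17:13:59.616\", \"levelname\": \"INFO\"}, \"context\": {}}"}]}'''


def flatten(obj, prefix=""):
    """Hypothetical helper: {"info": {"created": x}} -> {"info.created": x}."""
    flat = {}
    for key, value in obj.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict) and value:
            flat.update(flatten(value, path))
        else:
            flat[path] = value  # leaves and empty objects are kept as-is
    return flat


envelope = json.loads(RAW)
for event in envelope["logEvents"]:
    print(type(event["message"]).__name__)  # str: one opaque field after the first parse
    inner = json.loads(event["message"])     # the second parse turns it into an object
    fields = flatten(inner)
    print(fields["info.created"], fields["info.levelname"])  # 2016-12-14 17:13:59.616 INFO
```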


Hmm... it should work if it is proper JSON throughout. That is the first question to answer. If it isn't, then yeah, you are in a pickle.

Either way, it makes sense to start from Kinesis, because at least it handles the JSON wrapper for you.

Send me a sample and I can try it. (I am assuming the sample above is not what your data looked like coming in; I am specifically interested in the backslashes.)
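The backslashes are JSON string escaping: they appear when one JSON document is stored as a string value inside another, as with "message" in the sample. One way to answer "is it proper JSON throughout?" for a given sample is to look for string values that themselves parse as a JSON object or array. A minimal sketch; find_embedded_json is a hypothetical helper:

```python
import json


def find_embedded_json(obj, path=""):
    """Hypothetical helper: return dotted paths whose value is a string that
    itself parses as a JSON object or array ("JSON inside a string")."""
    found = []
    if isinstance(obj, dict):
        for key, value in obj.items():
            found += find_embedded_json(value, f"{path}.{key}" if path else key)
    elif isinstance(obj, list):
        for i, value in enumerate(obj):
            found += find_embedded_json(value, f"{path}[{i}]")
    elif isinstance(obj, str):
        try:
            parsed = json.loads(obj)
        except ValueError:  # json.JSONDecodeError subclasses ValueError
            return found
        if isinstance(parsed, (dict, list)):
            found.append(path)
    return found


escaped = {"logEvents": [{"message": "{\"info\": {\"levelname\": \"INFO\"}}"}]}
nested = {"logEvents": [{"message": {"info": {"levelname": "INFO"}}}]}
print(find_embedded_json(escaped))  # ['logEvents[0].message']
print(find_embedded_json(nested))   # []
```

An empty result means the sample is JSON throughout; any reported path is a field that a single JSON parse will leave as text.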


Hello... @mreynov_splunk, can you help?

Is this an actual bug like before, or am I doing something wrong?