How can we distribute files from HDFS to be indexed evenly across our four indexers?

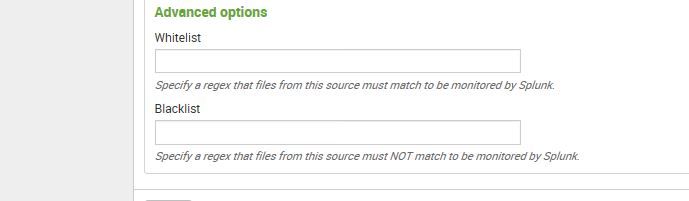

We have an HDFS source containing Sqoop files named 000000_0 through 003064_0, and every file is the same size. On each of the four indexers, we would like to use the Whitelist or the Blacklist under Data inputs > Files & directories so that each indexer processes one quarter of these files.

Any ideas?

If you are using a forwarder, is this the document you are looking for? How to set up load balancing with a forwarder:

http://docs.splunk.com/Documentation/Splunk/6.5.1/Forwarding/Setuploadbalancingd#How_load_balancing_...

Load balancing will ensure that data is distributed among your indexers.

To begin with, we want to distribute the HDFS data statically and uniformly across the four indexers. Since the Sqoop files are named 000000_0 to 003064_0, we could assign the first indexer, for example, the range 000000_0 to 000766_0 (3064 / 4 = 766), and put that range in its Whitelist.
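For what it's worth, here is a rough Python sketch of that arithmetic. It splits 000000 to 003064 into four contiguous ranges and builds one regular expression per range that could be pasted into a Whitelist field. The helper names (`split_ranges`, `digits_regex`, `whitelist_for`) are made up for this illustration, and the leading `/` in the pattern assumes the whitelist is matched against the full file path with the file sitting inside some directory, so check it against your actual paths before relying on it.

```python
import re

FIRST, LAST = 0, 3064   # file numbers from the question: 000000_0 .. 003064_0
WIDTH = 6               # zero-padded width of the numeric part
INDEXERS = 4


def split_ranges(first, last, parts):
    """Split first..last (inclusive) into `parts` contiguous, near-equal ranges."""
    size, extra = divmod(last - first + 1, parts)
    ranges, start = [], first
    for i in range(parts):
        # The first `extra` ranges take one additional file each.
        end = start + size + (1 if i < extra else 0) - 1
        ranges.append((start, end))
        start = end + 1
    return ranges


def digits_regex(a, b):
    """Regex matching every equal-width digit string s with a <= s <= b."""
    if a == b:
        return a
    if a[0] == b[0]:
        return a[0] + digits_regex(a[1:], b[1:])
    n = len(a) - 1
    lo_d, hi_d = int(a[0]), int(b[0])
    parts = []
    if a[1:] != "0" * n:
        # Partial block at the bottom: a[0] followed by a[1:] .. 99...9
        parts.append(a[0] + digits_regex(a[1:], "9" * n))
        lo_d += 1
    if b[1:] != "9" * n:
        # Partial block at the top: b[0] followed by 00...0 .. b[1:]
        parts.append(b[0] + digits_regex("0" * n, b[1:]))
        hi_d -= 1
    if lo_d <= hi_d:
        # Leading digits whose whole block of n trailing digits is included.
        lead = str(lo_d) if lo_d == hi_d else f"[{lo_d}-{hi_d}]"
        parts.append(lead + "[0-9]" * n)
    return parts[0] if len(parts) == 1 else "(?:" + "|".join(parts) + ")"


def whitelist_for(lo, hi):
    """Hypothetical whitelist pattern: file name is <number>_0 at the end of the path."""
    body = digits_regex(str(lo).zfill(WIDTH), str(hi).zfill(WIDTH))
    return f"/{body}_0$"


RANGES = split_ranges(FIRST, LAST, INDEXERS)
WHITELISTS = [whitelist_for(lo, hi) for lo, hi in RANGES]

if __name__ == "__main__":
    for n, ((lo, hi), pattern) in enumerate(zip(RANGES, WHITELISTS), start=1):
        print(f"indexer {n}: {lo:06d}_0 .. {hi:06d}_0  whitelist = {pattern}")
```

Note that 000000 to 003064 is 3065 files, so the split cannot be perfectly even: in this sketch the first indexer gets 767 files (000000 to 000766) and the other three get 766 each.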

Maybe you can suggest a more creative solution...