Splitting JSON Response into Rows for each event - ...

I'm using the REST API app and I'm having trouble with the response handlers and/or with how Splunk handles JSON data.

I have a very large JSON file that gets returned, and I want to create an entry for each row in the data, but the column names and row values aren't paired up directly like in most examples I've seen in the help sections.

Here's an example of the data:

    {
      "columns": [
        {
          "type": "dimension",
          "name": "Time",
          "description": "Time as per time zone specified in the report",
          "index": 0
        },
        {
          "type": "dimension",
          "name": "Country",
          "description": "Country from which viewer requested media",
          "index": 1
        },
        {
          "type": "metric",
          "name": "Plays with Rebuffers",
          "description": "Number of Plays with Rebuffers",
          "index": 2,
          "aggregate": "418",
          "peak": "156",
          "unit": null
        }
      ],
      "rows": [
        ["1415837040", "US", "156"],
        ["1415837040", "CH", "136"],
        ["1415836980", "US", "113"],
        ["1415836980", "CH", "103"],
        ["1415836920", "US", "95"]
      ],
      "metaData": {
        "aggregation": 60,
        "limit": 5,
        "startTimeInEpoch": 1415836800,
        "hasMoreData": false,
        "timeZone": "GMT",
        "offset": 0,
        "reportPack": "HDS Qos Test",
        "endTimeInEpoch": 1415837100
      }
    }

In the end, I want one event per row, using the name field from the columns as the field names and discarding the metadata:

Time=1415837040 Country=US "Plays with Rebuffers"=156

Time=1415837040 Country=CH "Plays with Rebuffers"=136

etc.

I also need each event's _time to match the time returned in its row. It looks like I need something like this in props.conf for my source type, but that won't work until I get the per-row parsing working.

TIME_PREFIX = Time=

TIME_FORMAT = %s

Thanks in advance for your time for anyone looking at this or responding.
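For reference, the key=value layout described above could be produced along these lines. This is only a minimal sketch in Python 3, not part of the REST API app: `format_kv` is a hypothetical helper name, and the quoting rule (quote any field name containing a space) is an assumption taken from the desired output shown in the question. The last lines check which instant the epoch value denotes, since `%s` in TIME_FORMAT means epoch seconds.

```python
from datetime import datetime, timezone


def format_kv(columns, row):
    """Render one row as key=value pairs, quoting names that contain spaces.

    Hypothetical helper for illustration only.
    """
    parts = []
    for name, value in zip(columns, row):
        key = '"%s"' % name if " " in name else name
        parts.append("%s=%s" % (key, value))
    return " ".join(parts)


columns = ["Time", "Country", "Plays with Rebuffers"]
row = ["1415837040", "US", "156"]

line = format_kv(columns, row)
print(line)  # Time=1415837040 Country=US "Plays with Rebuffers"=156

# Interpret the first field as epoch seconds, as TIME_FORMAT = %s would.
event_time = datetime.fromtimestamp(int(row[0]), tz=timezone.utc)
print(event_time.isoformat())  # 2014-11-13T00:04:00+00:00
```

Note that TIME_PREFIX = Time= only lines up with events written in this key=value form; an event emitted as a JSON object would carry the field as "Time": "1415837040" instead.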

Try this code in responsehandlers.py:

    # Relies on the json import and the print_xml_stream helper
    # already present in responsehandlers.py.
    class YourJSONArrayHandler:

        def __init__(self, **args):
            pass

        def __call__(self, response_object, raw_response_output, response_type, req_args, endpoint):
            if response_type == "json":
                raw_json = json.loads(raw_response_output)
                # Collect the column names in order.
                column_list = []
                for column in raw_json['columns']:
                    column_list.append(column['name'])
                # Emit one event per row, keyed by column name.
                for row in raw_json['rows']:
                    i = 0
                    new_event = {}
                    for row_item in row:
                        new_event[column_list[i]] = row_item
                        i = i + 1
                    print_xml_stream(json.dumps(new_event))
            else:
                print_xml_stream(raw_response_output)
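To check the pairing logic outside Splunk before dropping it into the handler, something like the following could be run on its own. It is a minimal sketch in Python 3: `rows_to_events` is a hypothetical function name, and the sample payload is a trimmed version of the data in the question, not real API output.

```python
import json


def rows_to_events(raw_response_output):
    """Pair each row with the column names and return one dict per row.

    Hypothetical stand-in for the body of the handler's __call__ method.
    """
    raw_json = json.loads(raw_response_output)
    column_list = [column["name"] for column in raw_json["columns"]]
    return [dict(zip(column_list, row)) for row in raw_json["rows"]]


# Trimmed sample based on the payload in the question.
sample = json.dumps({
    "columns": [
        {"name": "Time"},
        {"name": "Country"},
        {"name": "Plays with Rebuffers"},
    ],
    "rows": [
        ["1415837040", "US", "156"],
        ["1415837040", "CH", "136"],
    ],
    "metaData": {"limit": 5},
})

events = rows_to_events(sample)
for event in events:
    # In the handler, this is where print_xml_stream would be called.
    print(json.dumps(event))
```

Each row becomes its own event, and the metaData block is dropped simply because nothing reads it.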

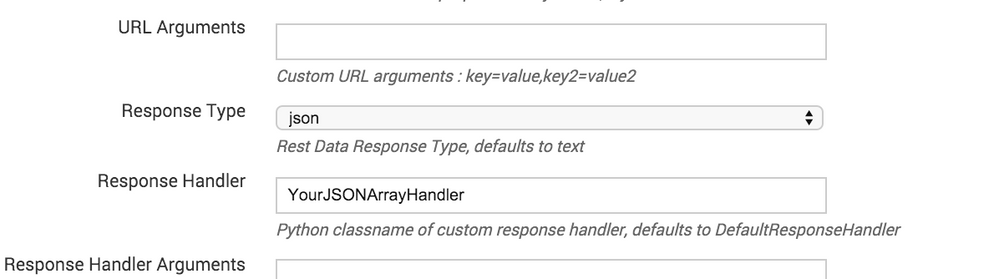

And wire it up in your stanza config :

Thank you for the response! I had no idea you would answer so quickly.

I had another version of this going, and I combined what you wrote with what I had. So far it's working great, other than some issues with the data source.

    class AkamaiColumnRowsJSONHandler:

        def __init__(self, **args):
            pass

        def __call__(self, response_object, raw_response_output, response_type, req_args, endpoint):
            if response_type == "json":
                raw_json = json.loads(raw_response_output)
                columns = [str(row['name']).replace(" ", "") for row in raw_json['columns']]
                for row in raw_json['rows']:
                    new_event = {}
                    for a, b in zip(columns, row):
                        if not b:  # Skip null-valued pairs
                            continue
                        new_event[a] = b
                    print_xml_stream(json.dumps(new_event))
            else:
                print_xml_stream(raw_response_output)
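One thing worth knowing about the `if not b` check above: it skips every falsy value, not only nulls. The sample rows carry strings, so it makes no difference there, but a numeric 0 or an empty string would be dropped as well. A minimal Python 3 sketch of the difference, with `build_event` as a hypothetical helper and made-up row values:

```python
def build_event(columns, row):
    """Pair names with values, dropping only values that are actually None.

    Hypothetical helper for illustration only.
    """
    return {name: value for name, value in zip(columns, row) if value is not None}


columns = ["Time", "Country", "PlayswithRebuffers"]
row = ["1415837040", None, 0]  # made-up values: one null, one numeric zero

# Truthiness test, as in the handler: drops both None and 0.
truthy_event = {a: b for a, b in zip(columns, row) if b}

# Explicit None test: drops only the null.
none_event = build_event(columns, row)

print(truthy_event)  # {'Time': '1415837040'}
print(none_event)    # {'Time': '1415837040', 'PlayswithRebuffers': 0}
```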

I'm really glad I was closer to a viable solution than I thought when I came here for help. You and your tool are fantastic, sir.