Why are the timestamps different when indexing CSV...

I'm having an issue with timestamps on CSV files. Here is a sample of the raw data:

```
DATE,HOUR,WORKFILE_COUNT,RATE_PER_WORKFILE,EST_NBR_WORKFILES_PER_HOUR,WORK_HOLD_FILE_COUNT,WORK_DONE_FILE_COUNT,UNPROCESSED_FILE_COUNT,BAD_FILE_COUNT
09/13/15,15,1172,247.238,14560.9,0,0,0,13
09/13/15,16,1190,247.011,14574.3,0,0,0,13
09/13/15,17,1217,227.313,15837.2,0,0,0,13
09/13/15,18,782,231.839,15528,0,0,0,13
09/13/15,19,648,240.61,14962,0,0,0,13
09/13/15,20,629,238.669,15083.6,0,0,0,13
09/13/15,21,394,242.297,14857.8,0,0,0,13
09/13/15,22,493,295.181,12195.9,0,0,0,13
09/13/15,23,257,280.949,12813.7,0,0,0,13
09/14/15,00,64,235.125,15311,0,0,0,13
```

Here is the props.conf in $SPLUNK_HOME/etc/system/local on the indexer:

```
[csv]
NO_BINARY_CHECK = true
TIMESTAMP_FIELDS = DATE,HOUR
TIME_FORMAT = %m/%d/%y%n%k
disabled = false
```

When I index the file locally on the indexer using the csv sourcetype, everything looks good and the timestamps are correct:

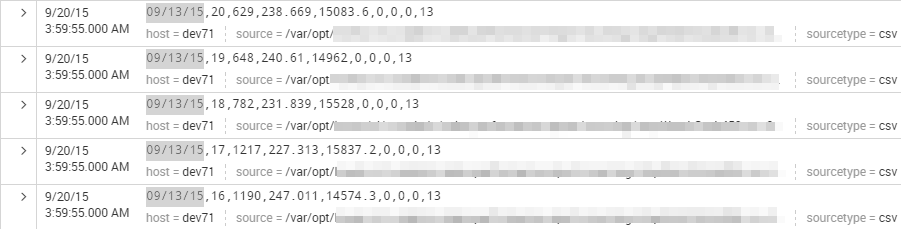

However, when the same file is sent via a universal forwarder, the file's modification time is used as the timestamp instead of the DATE and HOUR columns in the CSV file.

I have tried a variety of changes to the props.conf file, but they all fail when the file is sent via the forwarder.

Hi ragedsparrow,

I suspect INDEXED_EXTRACTIONS = csv is configured in the forwarder's props.conf (Splunk v6). In that case the forwarder parses the CSV file into a structured stream first and then passes it to the indexer ready for immediate indexing (i.e. the indexer does not parse the file again), so the timestamp settings in the indexer's props.conf never get applied.

Refer to the following in the docs: http://docs.splunk.com/Documentation/Splunk/6.2.2/Data/Extractfieldsfromfileheadersatindextime#Cavea...

You will need to either deploy your props configuration to your forwarders, or define another sourcetype for the CSV files and make sure INDEXED_EXTRACTIONS does not happen on the forwarder, so the data is forwarded to the indexer in an unstructured state and parsed there.
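A minimal sketch of the first option, assuming the forwarder reads the file with the csv sourcetype. The props.conf stanza simply copies the settings that already worked on the indexer onto the forwarder, since that is where the structured parsing happens. The monitor path in inputs.conf is a placeholder, not taken from the original post.

```ini
# props.conf on the universal forwarder
# (e.g. $SPLUNK_HOME/etc/system/local/props.conf)
[csv]
# Parse the file as structured CSV on the forwarder
INDEXED_EXTRACTIONS = csv
NO_BINARY_CHECK = true
# Build the event timestamp from these header columns
TIMESTAMP_FIELDS = DATE,HOUR
# Same format that worked when indexing locally
TIME_FORMAT = %m/%d/%y%n%k

# inputs.conf on the universal forwarder
# (hypothetical path; point it at the real CSV file)
[monitor:///path/to/your_file.csv]
sourcetype = csv
```

Restart the forwarder after editing these files so the new settings take effect. Timestamps are assigned at index time, so events that were already indexed keep the timestamps they were given.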

Hope this makes sense and helps.

gcato, that is exactly what was happening (a good sign that I need to read the documentation more). I was modifying the props.conf file on the indexer and not on the forwarder, which is why the timestamps were correct for the test data I indexed locally on the indexer but not for the forwarded data. Thank you so much for helping me with this headache.

Glad it helped. A case of been there, done that for me.