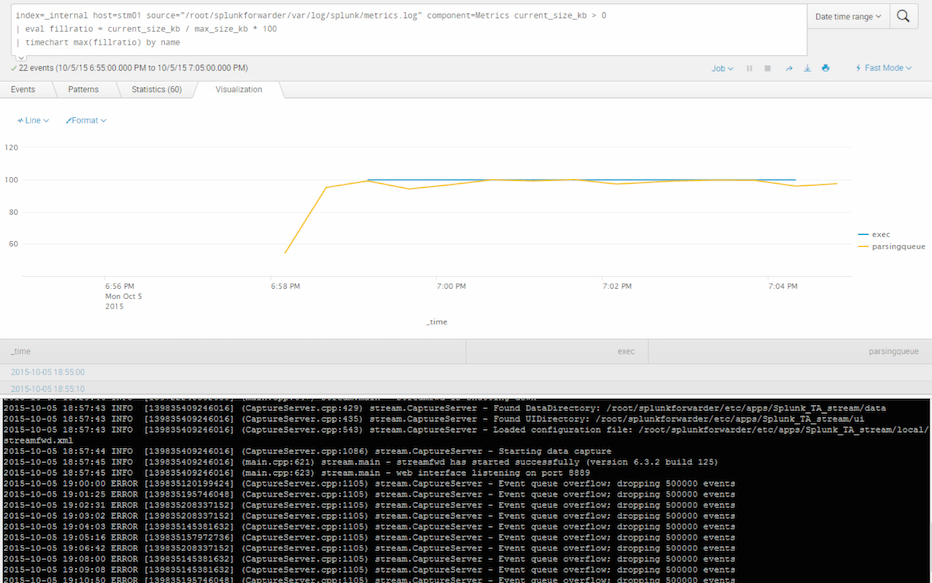

Splunk App for Stream: How to resolve congestion in parsing queue after starting the universal forwarder?

Hello Stream experts,

I'm running a stress test with streamfwd, capturing a large number of short-lived TCP connections at over 35000 cps.

The Splunk App for Stream is running on a universal forwarder (UF) and sending the captured data to a remote indexer.

I configured it as follows:

- Splunk 6.3 on both sides

- Stream app version is 6.3.2

- maxKBps = 0 on the UF side

- increased all UF queue sizes, including the exec queue and parsingQueue, to over 30MB

- applied the recommended TCP parameters on both the UF and the indexer node

- set the following in streamfwd.xml: ProcessingThreads: 8, PcapBufferSize: 127108864, TcpConnectionTimeout: 1, MaxEventQueueSize: 500000
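For reference, the throughput and queue settings in the list above would typically look something like the following on the UF. This is only a sketch: the file locations are the usual ones for these settings, the 30MB value is illustrative (the list only says "over 30MB"), and only parsingQueue is shown because the stanza name for the exec queue is not given here.

```ini
# limits.conf on the UF
# 0 removes the forwarder's throughput cap
[thruput]
maxKBps = 0

# server.conf on the UF
# Enlarge the parsing queue (illustrative value)
[queue=parsingQueue]
maxSize = 30MB
```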

As you can see from the chart in the attached image, the parsingQueue fills up very quickly after the UF starts. The exec queue fills up immediately afterward, and Stream's event queue then backs up as well.

Strangely, I couldn't find any congestion in the indexer queues at that moment.

What should I check to resolve this problem?

Thank you in advance.


My problem was caused by the "compressed=true" option in outputs.conf. Under heavy load (such as a performance test scenario), this option caused the UF's parsingQueue to fill up easily.

I resolved it by setting compressed to false and increasing maxQueueSize (to 10MB in my case) in outputs.conf.
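A minimal sketch of what that change might look like in outputs.conf on the UF. The group name and indexer address are hypothetical placeholders, not values from my setup; only compressed and maxQueueSize reflect the fix described above.

```ini
# outputs.conf on the UF
[tcpout]
# Hypothetical output group name
defaultGroup = primary_indexers

[tcpout:primary_indexers]
# Hypothetical indexer host and receiving port
server = indexer.example.com:9997
# Turn off compression of forwarded data
compressed = false
# Larger output queue (10MB in my case)
maxQueueSize = 10MB
```

Note that comments in Splunk .conf files need to be on their own lines, as above. If the receiving splunktcp input on the indexer was also configured with compressed = true, it probably needs to be changed to match, so it is worth checking inputs.conf on the indexer side as well.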