Splunk Answers : Using Splunk : Splunk Search

How to get complex transactions over two fields

My transactions are defined by two fields, JOBID and SUBJOBID. A typical search result contains events like these:

JOBID=901031

JOBID=901031 SUBJOBID=441

JOBID=901031 SUBJOBID=640022429

SUBJOBID=441

SUBJOBID=640022429

JOBID=901031

JOBID=901031 SUBJOBID=472

JOBID=901031 SUBJOBID=740022431

SUBJOBID=472

SUBJOBID=740022431

JOBID=901031

How can I use the transaction command to group all of the events above, which belong to JOBID=901031, into a single transaction?

I've tried the command transaction JOBID, SUBJOBID mvlist=true, but Splunk returned four transactions instead of the single one I expected:

Transaction 1:

JOBID=901031

JOBID=901031 SUBJOBID=441

SUBJOBID=441

Transaction 2:

JOBID=901031 SUBJOBID=640022429

SUBJOBID=640022429

Transaction 3:

JOBID=901031

JOBID=901031 SUBJOBID=472

SUBJOBID=472

Transaction 4:

JOBID=901031 SUBJOBID=740022431

SUBJOBID=740022431

JOBID=901031

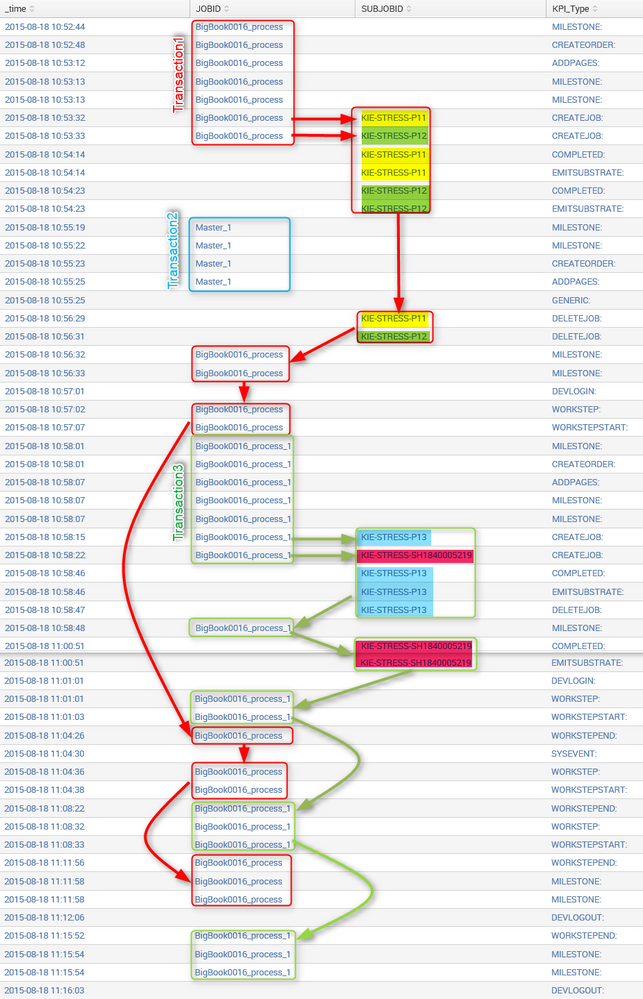

Maybe the graphic above makes my complex transaction problem a little less abstract. It shows three transactions within one log snippet (framed in red, blue, and green). The red and green transactions contain events that are related to the main transaction (JOBID) only through their SUBJOBIDs. Going down the log, the transactions can be nested inside each other, and there are also events that don't belong to any transaction, just like in real (server log) life...
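To pin down the target grouping, here is a minimal plain-Python sketch (not Splunk) under one assumption: two events belong to the same transaction whenever they are connected through a shared JOBID or SUBJOBID value, directly or through a chain of events. The technique is swapped in for illustration (a union-find over ID values), and the name group_events is hypothetical. It also assumes a SUBJOBID is never reused by a different JOBID; if it were, the two jobs would be merged.

```python
# Desired grouping, modeled as connected components over ID values.
# Hypothetical helper; this is not how Splunk's transaction command works.

def group_events(events):
    parent = {}

    def find(node):
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving
            node = parent[node]
        return node

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    def id_nodes(ev):
        # Prefix with the field name so a JOBID and a SUBJOBID with the
        # same text are treated as different nodes.
        return [f"{field}={ev[field]}" for field in ("JOBID", "SUBJOBID") if field in ev]

    # An event carrying both IDs links its JOBID and SUBJOBID together.
    for ev in events:
        nodes = id_nodes(ev)
        for node in nodes[1:]:
            union(nodes[0], node)

    # Collect event indexes per component; events with neither ID are skipped.
    groups = {}
    for index, ev in enumerate(events):
        nodes = id_nodes(ev)
        if not nodes:
            continue
        groups.setdefault(find(nodes[0]), []).append(index)
    return list(groups.values())


events = [
    {"JOBID": "901031"},
    {"JOBID": "901031", "SUBJOBID": "441"},
    {"JOBID": "901031", "SUBJOBID": "640022429"},
    {"SUBJOBID": "441"},
    {"SUBJOBID": "640022429"},
    {"JOBID": "901031"},
    {"JOBID": "901031", "SUBJOBID": "472"},
    {"JOBID": "901031", "SUBJOBID": "740022431"},
    {"SUBJOBID": "472"},
    {"SUBJOBID": "740022431"},
    {"JOBID": "901031"},
]

print(group_events(events))  # [[0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10]]
```

Because union-find ignores event order, nested or interleaved transactions in the log do not change the result, and events carrying neither ID stay outside every group.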

This is a tricky problem to solve. My suggestion is a little complicated but should work. I would:

- Create a key that ties each JOBID to all of its SUBJOBIDs.

- Create a lookup that contains the JOBID, the SUBJOBID, and the newly created key.

- Use the lookup in your transaction search.

- (optional) Set up a scheduled search that keeps the lookup updated with new keys as new data arrives. I'd do this only if you need to create this kind of transaction fairly often.

So first, create a key where each record consists of a JOBID and all of that JOBID's SUBJOBIDs. In the search, also include the fields that will be needed for correlation later, namely JOBID and SUBJOBID. Your search might look something like this:

... base search here ... | stats values(SUBJOBID) as SUBJOBID by JOBID | eval key=JOBID."-".mvjoin(SUBJOBID,"-") | mvexpand SUBJOBID
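As a rough illustration of what the key-building search produces, the sketch below mirrors its three stages in plain Python. It is a model, not Splunk: build_key_table is a hypothetical name, values() is modeled as the distinct SUBJOBIDs in lexicographic order, and a JOBID without any SUBJOBID simply yields no rows in this model.

```python
# Plain-Python model of the key-building search:
#   stats values(SUBJOBID) as SUBJOBID by JOBID
#   | eval key=JOBID."-".mvjoin(SUBJOBID,"-")
#   | mvexpand SUBJOBID

def build_key_table(events):
    # stats ... by JOBID: collect the distinct SUBJOBIDs of each JOBID.
    # Events without a JOBID are skipped, like results with a null "by" field.
    subs_by_job = {}
    for ev in events:
        if "JOBID" not in ev:
            continue
        subs = subs_by_job.setdefault(ev["JOBID"], set())
        if "SUBJOBID" in ev:
            subs.add(ev["SUBJOBID"])

    rows = []
    for job, subs in subs_by_job.items():
        ordered = sorted(subs)            # distinct values, lexicographic order
        key = "-".join([job] + ordered)   # JOBID followed by all its SUBJOBIDs
        for sub in ordered:               # mvexpand: one row per SUBJOBID
            rows.append({"JOBID": job, "SUBJOBID": sub, "key": key})
    return rows


events = [
    {"JOBID": "901031"},
    {"JOBID": "901031", "SUBJOBID": "441"},
    {"JOBID": "901031", "SUBJOBID": "640022429"},
    {"SUBJOBID": "441"},
    {"SUBJOBID": "640022429"},
    {"JOBID": "901031"},
    {"JOBID": "901031", "SUBJOBID": "472"},
    {"JOBID": "901031", "SUBJOBID": "740022431"},
    {"SUBJOBID": "472"},
    {"SUBJOBID": "740022431"},
    {"JOBID": "901031"},
]

rows = build_key_table(events)
for row in rows:
    print(row)
```

Every row for JOBID=901031 carries the same key, which is what lets events found via either ID end up in one transaction later.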

Export the results of the above search as a CSV file (either through | outputcsv or through the export button in the GUI) and use it to set up a new lookup. The JOBID and SUBJOBID fields should be set as input fields, and the key field should be an output field. For information on how to create a lookup, see the Splunk documentation on lookups. For the rest of this answer, I'll refer to this lookup as keyLookup.

Now you can use keyLookup in a search to add a key to each record with a JOBID or SUBJOBID, then create a transaction based on key:

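... base search here ... | lookup keyLookup JOBID as JOBID OUTPUT key as key | lookup keyLookup SUBJOBID as SUBJOBID OUTPUTNEW key as key | transaction key

Using OUTPUTNEW on the second lookup keeps a key that was already found via JOBID instead of overwriting it.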
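The sketch below models in plain Python how the two lookups attach a key to each event and how grouping by that key then behaves. KEY_LOOKUP holds hypothetical sample rows, each lookup is simplified to "first matching row wins", and the second lookup only fills a key that is still missing, which corresponds to OUTPUTNEW rather than OUTPUT. All function names are hypothetical.

```python
# Hypothetical lookup rows, shaped like the output of the key-building search.
KEY_LOOKUP = [
    {"JOBID": "901031", "SUBJOBID": "441", "key": "901031-441-640022429"},
    {"JOBID": "901031", "SUBJOBID": "640022429", "key": "901031-441-640022429"},
    {"JOBID": "777", "SUBJOBID": "5", "key": "777-5"},
]


def lookup_key(table, field, value):
    # Simplification: return the key of the first matching row, or None.
    for row in table:
        if row[field] == value:
            return row["key"]
    return None


def assign_keys(events, table):
    out = []
    for ev in events:
        ev = dict(ev)
        # First lookup: by JOBID.
        if "JOBID" in ev:
            key = lookup_key(table, "JOBID", ev["JOBID"])
            if key is not None:
                ev["key"] = key
        # Second lookup: by SUBJOBID, only if no key was found yet.
        if "key" not in ev and "SUBJOBID" in ev:
            key = lookup_key(table, "SUBJOBID", ev["SUBJOBID"])
            if key is not None:
                ev["key"] = key
        out.append(ev)
    return out


def group_by_key(events):
    # Stand-in for "transaction key": events without a key are left out.
    groups = {}
    for ev in events:
        if "key" in ev:
            groups.setdefault(ev["key"], []).append(ev)
    return groups


events = [
    {"JOBID": "901031"},
    {"JOBID": "901031", "SUBJOBID": "441"},
    {"JOBID": "901031", "SUBJOBID": "640022429"},
    {"JOBID": "777"},
    {"SUBJOBID": "441"},
    {"JOBID": "777", "SUBJOBID": "5"},
    {"SUBJOBID": "640022429"},
    {"SUBJOBID": "5"},
    {"msg": "unrelated"},
]

groups = group_by_key(assign_keys(events, KEY_LOOKUP))
for key, members in groups.items():
    print(key, len(members))
```

Events that carry only a SUBJOBID still land in their job's group, and the unrelated event stays outside every group.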

You will need to recreate this lookup whenever you want to run your transaction search, because new incoming data could contain new JOBIDs and SUBJOBIDs. If you want to use this with any regularity, I'd suggest automating the creation of the lookup. You can do this by scheduling a search job that makes some creative use of inputlookup, stats and outputlookup. See this post for inspiration on creating a scheduled search that updates your lookup.
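The linked post isn't reproduced here, but the idea behind a scheduled refresh can be sketched in plain Python as a rough analogue of inputlookup plus new data plus stats plus outputlookup: merge the JOBID/SUBJOBID pairs already in the lookup with those found in new events, then rebuild every key. refresh_lookup is a hypothetical name, and the key format follows the key-building search above. One consequence worth noting: a JOBID's key changes whenever a new SUBJOBID appears for it, so all of that JOBID's rows must be rewritten rather than just appended.

```python
def refresh_lookup(existing_rows, new_events):
    # Every (JOBID, SUBJOBID) pair already in the lookup ...
    pairs = {(row["JOBID"], row["SUBJOBID"]) for row in existing_rows}
    # ... plus every pair seen in new events that carry both IDs.
    for ev in new_events:
        if "JOBID" in ev and "SUBJOBID" in ev:
            pairs.add((ev["JOBID"], ev["SUBJOBID"]))

    subs_by_job = {}
    for job, sub in pairs:
        subs_by_job.setdefault(job, set()).add(sub)

    # Rebuild all rows, since a JOBID's key depends on all its SUBJOBIDs.
    rows = []
    for job in sorted(subs_by_job):
        ordered = sorted(subs_by_job[job])
        key = "-".join([job] + ordered)
        rows.extend({"JOBID": job, "SUBJOBID": sub, "key": key} for sub in ordered)
    return rows


old = [{"JOBID": "1", "SUBJOBID": "a", "key": "1-a"}]
new = [{"JOBID": "1", "SUBJOBID": "b"}, {"JOBID": "2", "SUBJOBID": "c"}, {"SUBJOBID": "a"}]
refreshed = refresh_lookup(old, new)
for row in refreshed:
    print(row)
```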

I hope this helps!

I had the same idea, but I did it all in the search instead of in a lookup:

index="answer_test" sourcetype="answer_csv" | join JOBID [search index="answer_test" sourcetype="answer_csv" | stats values(SUBJOBID) as SUBJOBID by JOBID | nomv SUBJOBID | eval CUST_ID = 'JOBID'." ".'SUBJOBID' | eval CUST_ID=if(isnull(CUST_ID),'JOBID','CUST_ID')] | transaction keepevicted=true CUST_ID
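A plain-Python model of this join-based search helps check which events actually end up with a CUST_ID. It assumes join runs as an inner join on JOBID (its default type); the space separators are an assumption of the model and don't affect grouping, and the function names are hypothetical. Under that assumption, events that carry only a SUBJOBID match no JOBID and drop out before the transaction step.

```python
# Plain-Python model of the join-based search (not Splunk itself).

def build_cust_ids(events):
    # Subsearch: one CUST_ID per JOBID, made of the JOBID plus its SUBJOBIDs.
    subs_by_job = {}
    for ev in events:
        if "JOBID" in ev:
            subs = subs_by_job.setdefault(ev["JOBID"], set())
            if "SUBJOBID" in ev:
                subs.add(ev["SUBJOBID"])
    # Without SUBJOBIDs, CUST_ID is just the JOBID (the isnull() fallback).
    return {job: " ".join([job] + sorted(subs)) for job, subs in subs_by_job.items()}


def join_on_jobid(events):
    cust_ids = build_cust_ids(events)
    joined = []
    for ev in events:
        job = ev.get("JOBID")
        # Inner join: events without a matching JOBID are not kept.
        if job in cust_ids:
            joined.append({**ev, "CUST_ID": cust_ids[job]})
    return joined


events = [
    {"JOBID": "901031"},
    {"JOBID": "901031", "SUBJOBID": "441"},
    {"SUBJOBID": "441"},
    {"JOBID": "901031"},
]

joined = join_on_jobid(events)
print(len(joined), "of", len(events), "events kept")
```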

I had thought about that as well. Great minds, eh? 🙂

The single search is much more elegant and has fewer moving parts. I'd much rather do it that way if possible. In the end, though, I decided to go with a lookup because of the subsearch result limits: I didn't know how many transactions the OP would need to report on.

Perhaps I am misunderstanding the nature of the request, but you can just as easily accomplish this with something like:

sourcetype="answers-1439910380" | transaction SUBJOBID | stats list(SUBJOBID) AS SUBJOBID by JOBID

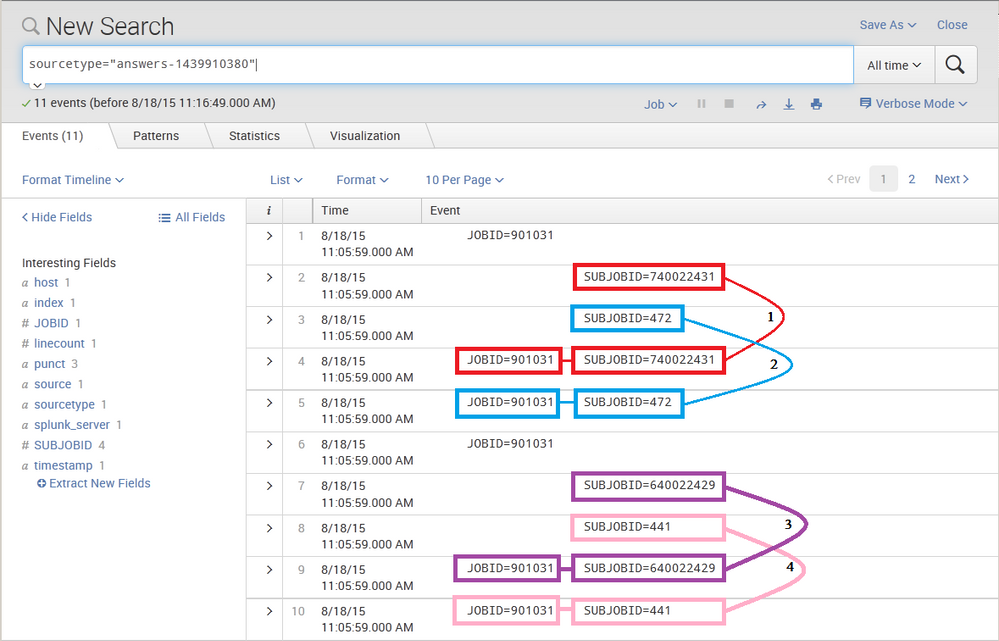

If my understanding of your question is correct, your data may look somewhat like this:

There are four meaningful records that a transaction can align. Because the events share the sub-transaction ID, you can group them (as you have already done). Now that you have four meaningful groups, you can simply list the data using JOBID as the common denominator, like this:
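Gilberto's pipeline can be modeled in plain Python to see which events it keeps. The model assumes that only events carrying a SUBJOBID take part in the SUBJOBID transactions, so events with only a JOBID never reach the stats step; subjobids_per_jobid is a hypothetical name.

```python
# Plain-Python model of:
#   transaction SUBJOBID | stats list(SUBJOBID) AS SUBJOBID by JOBID

def subjobids_per_jobid(events):
    # transaction SUBJOBID: one group per SUBJOBID value, in first-seen order.
    by_sub = {}
    for ev in events:
        if "SUBJOBID" in ev:
            by_sub.setdefault(ev["SUBJOBID"], []).append(ev)

    # stats list(SUBJOBID) by JOBID: each group takes the JOBID of its event
    # that has both IDs; groups without such an event are dropped.
    result = {}
    for sub, group in by_sub.items():
        for job in sorted({ev["JOBID"] for ev in group if "JOBID" in ev}):
            result.setdefault(job, []).append(sub)
    return result


events = [
    {"JOBID": "901031"},
    {"JOBID": "901031", "SUBJOBID": "441"},
    {"JOBID": "901031", "SUBJOBID": "640022429"},
    {"SUBJOBID": "441"},
    {"SUBJOBID": "640022429"},
    {"JOBID": "901031"},
    {"JOBID": "901031", "SUBJOBID": "472"},
    {"JOBID": "901031", "SUBJOBID": "740022431"},
    {"SUBJOBID": "472"},
    {"SUBJOBID": "740022431"},
    {"JOBID": "901031"},
]

result = subjobids_per_jobid(events)
used = sum(1 for ev in events if "SUBJOBID" in ev)
print(result)
print(used, "of", len(events), "events contribute")
```

In this model the result is one row per JOBID, but the three events that carry only JOBID=901031 never contribute, which is the gap the original poster points out in the reply below.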

Hi Gilberto,

Yes, perhaps there is a small misunderstanding: your approach considers only the events that have both a JOBID and a SUBJOBID. What I am looking for is a single grouping of all the events listed above, containing all previously evaluated fields (because I want to collect the different data fields along a transaction).

Maybe take a look at the newly added graphic at the end of my question above. I hope it shows a little better what I am looking for.

Greetings Lars

I assume you have events with a SUBJOBID that don't contain the JOBID, but that in such cases there is an earlier event with the same SUBJOBID and a JOBID. If this is true, I would try a self join so that each event has a valid JOBID field.
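The intended effect of this idea, giving every event a valid JOBID via its SUBJOBID, can be sketched in plain Python in two passes. This does not reproduce what Splunk's selfjoin command does; it only models the goal. The function names and the interleaved sample events (jobs A and B) are hypothetical, and it assumes each SUBJOBID appears with only one JOBID.

```python
def fill_jobid_from_subjobid(events):
    # Pass 1: learn SUBJOBID -> JOBID from events that carry both IDs.
    job_of_sub = {}
    for ev in events:
        if "JOBID" in ev and "SUBJOBID" in ev:
            job_of_sub[ev["SUBJOBID"]] = ev["JOBID"]

    # Pass 2: give SUBJOBID-only events the JOBID learned in pass 1.
    filled = []
    for ev in events:
        ev = dict(ev)
        if "JOBID" not in ev and ev.get("SUBJOBID") in job_of_sub:
            ev["JOBID"] = job_of_sub[ev["SUBJOBID"]]
        filled.append(ev)
    return filled


def group_by_jobid(events):
    # Stand-in for "transaction JOBID": events without a JOBID are left out.
    groups = {}
    for ev in events:
        if "JOBID" in ev:
            groups.setdefault(ev["JOBID"], []).append(ev)
    return groups


events = [
    {"JOBID": "A"},
    {"JOBID": "A", "SUBJOBID": "1"},
    {"JOBID": "B"},
    {"JOBID": "B", "SUBJOBID": "2"},
    {"SUBJOBID": "1"},
    {"SUBJOBID": "2"},
    {"JOBID": "A"},
    {"msg": "unrelated"},
]

groups = group_by_jobid(fill_jobid_from_subjobid(events))
for job, members in groups.items():
    print(job, len(members))
```

Because the mapping is learned from the events that carry both IDs rather than from event order, interleaved or nested jobs are still assigned correctly in this model.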

@FritzWittwer: Hi Fritz, thank you for your help as well. I tried ... | selfjoin SUBJOBID | transaction JOBID, and this results in more than four transactions that mix in events from other transactions. It seems that combining these two commands creates new relationships between events with a particular SUBJOBID and unrelated JOBIDs. It doesn't work, but thank you so much!

@Tom: Hi Tom, ... of course I know you very well 😉

Unfortunately, filldown doesn't produce the correct transaction groups either. But many thanks as well!

This is true.

I've tried ... | transaction JOBID, SUBJOBID mvlist=true | selfjoin JOBID keepsingle=true max=0, but this did not change the result: again I see as many transactions per JOBID as there are distinct SUBJOBIDs.

If I try ... | transaction JOBID, SUBJOBID mvlist=true | selfjoin JOBID keepsingle=false max=0 there is only one result and all other JOBIDs are lost.

Neither of them groups the events into a single transaction per JOBID.

You have to do the selfjoin on SUBJOBID before the transaction, e.g.:

... | selfjoin SUBJOBID | transaction JOBID

Hello Lars,

you might know me in person 😉

I can't test it, but you might give filldown a try. Right before your transaction command, use filldown JOBID | transaction...

This might fill in the missing JOBID values, and then you can simply use | transaction JOBID to form your transactions by JOBID.

Greetings

Tom
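Why filldown can mislabel events shows up in a small plain-Python model. The sketch assumes filldown's behavior of replacing a missing value with the last non-null value of that field in result order; the Python filldown function is a hypothetical stand-in, and the interleaved events (jobs A and B) are made up for illustration. Splunk returns events newest first by default, so in a real search the value would come from the next newer event, but with interleaved jobs either order can attach the wrong JOBID.

```python
def filldown(events, field):
    # Copy the last seen value of `field` into events where it is missing,
    # in the order the events are given.
    last = None
    out = []
    for ev in events:
        ev = dict(ev)
        if field in ev:
            last = ev[field]
        elif last is not None:
            ev[field] = last
        out.append(ev)
    return out


events = [
    {"JOBID": "A"},
    {"JOBID": "A", "SUBJOBID": "1"},
    {"JOBID": "B"},
    {"JOBID": "B", "SUBJOBID": "2"},
    {"SUBJOBID": "1"},   # belongs to job A
    {"SUBJOBID": "2"},   # belongs to job B
    {"JOBID": "A"},
    {"msg": "unrelated"},
]

filled = filldown(events, "JOBID")
for ev in filled:
    print(ev)
```

Here the SUBJOBID=1 event is labeled with job B simply because B was the most recent JOBID, and even the unrelated event receives a JOBID, which matches the wrong groupings reported in this thread.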

If you want only the events related to a single JOBID, why specify SUBJOBID?

Some events have only a SUBJOBID and belong to the JOBID only indirectly. My event data starts with an event that has both IDs, followed by several events identified by SUBJOBID only. The event with both IDs establishes the relationship between the events that have only the JOBID and the events that have only one of its related SUBJOBIDs.