- Splunk Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- _time, calculations in transactions and mvlist

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

When I run a transaction command to group events together, I lose the _time information originally associated with those events.

I have PLC sensor data in the form unit/unit time that I am trying to totalize over time. A previous question: http://answers.splunk.com/answers/261503/totalize-a-rate-over-time.html#answer-266956 solved the problem of how to implement a total, but I found that it didn't work for discontinuous data.

I am now trying something like this:

sensor Value># | transaction maxpause=2m maxevents=-1

which will successfully filter the events and group them into continuous runs of good data. In the set I'm working with this returns 5 transactions.

Next, I need to be able to do a trapezoidal sum on the values grouped by those transactions, with _time as the x unit. What I can't figure out is how to get the solution I was given to work with transaction data. I found some examples, but none that needed to use the _time field after applying a transaction.

If I ask mvlist to return the _time value the search breaks down and does not return the correct number of events from the transaction command.

sensor Value># | transaction maxpause=2m maxevents=-1 mvlist=_time, Value

The above does not return the correct # of events.

I am new to splunk, coming from a manufacturing and not a coding background, and trying to figure out how to get splunk to do routine tasks I already do in other programs. Any help would be much appreciated!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Given the following clarifications, I do not believe there can be a perfect solution:

There is a field which identifies the sensor, like a SensorName="Sensor A Units/Unit Time". By internal convention the units are in the name of the sensor. For now I am looking at a single sensor--still getting used to splunk. I have rate data. I am trying to calculate a total. So for example if my sensor was an endurance runner and then Value would a speed in m/sec, which updates while he is running. I am trying to take this speed and calculate how far he ran. The events are when the PLC has recorded the sensor data, and they are not evenly spaced. So I might have one event, get one 5 sec later, 4 sec later, 4 sec later, 10 sec later, etc. I only want to count miles he ran, so I will insist that the speed is above a certain threshold before I want to consider it for totaling. The method provided (in the other thread, linked in my question) for a trapezoidal sum works great if the runner is always running during the time period of interest. It breaks down if the runner stops. The distance total jumps up across the gap. I think I understand why that is -- you're just iterating through the table, so from the computer's perspective that time in between running is multiplied by whatever the rate was when he stopped and then added to the total.

However, this should work pretty well, I think (note that I reversed the events so that last does the correct thing); also be sure to change the threshholdSpeed value from 10 to whatever is proper:

sensor Value># | reverse | eval thresholdSpeed=10 | streamstats current=f last(_time) AS prevTime BY last(Value) AS prevValue BY SensorName | eval duration=_time-prevTime | eval avgSpeed=(Value+prevValue)/2 | eval avgSpeed=if(avgSpeed>=threshholdSpeed, avgSpeed, 0) | eval distance=duration*avgSpeed | stats sum(distance) AS total BY SensorName

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Given the following clarifications, I do not believe there can be a perfect solution:

There is a field which identifies the sensor, like a SensorName="Sensor A Units/Unit Time". By internal convention the units are in the name of the sensor. For now I am looking at a single sensor--still getting used to splunk. I have rate data. I am trying to calculate a total. So for example if my sensor was an endurance runner and then Value would a speed in m/sec, which updates while he is running. I am trying to take this speed and calculate how far he ran. The events are when the PLC has recorded the sensor data, and they are not evenly spaced. So I might have one event, get one 5 sec later, 4 sec later, 4 sec later, 10 sec later, etc. I only want to count miles he ran, so I will insist that the speed is above a certain threshold before I want to consider it for totaling. The method provided (in the other thread, linked in my question) for a trapezoidal sum works great if the runner is always running during the time period of interest. It breaks down if the runner stops. The distance total jumps up across the gap. I think I understand why that is -- you're just iterating through the table, so from the computer's perspective that time in between running is multiplied by whatever the rate was when he stopped and then added to the total.

However, this should work pretty well, I think (note that I reversed the events so that last does the correct thing); also be sure to change the threshholdSpeed value from 10 to whatever is proper:

sensor Value># | reverse | eval thresholdSpeed=10 | streamstats current=f last(_time) AS prevTime BY last(Value) AS prevValue BY SensorName | eval duration=_time-prevTime | eval avgSpeed=(Value+prevValue)/2 | eval avgSpeed=if(avgSpeed>=threshholdSpeed, avgSpeed, 0) | eval distance=duration*avgSpeed | stats sum(distance) AS total BY SensorName

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Progress!!! I generalized the language a bit and removed the by between the last(_time) and the last(Value). I had to do that in order to make the eval calculations work.

sensorA | reverse | eval thresholdRate=3500 | streamstats current=f last(_time) AS prevTime last(Value) AS prevValue | eval duration=(_time-prevTime) / 3600 | eval avgRate=(Value + prevValue)/2 | eval avgRate=if(avgRate>=thresholdRate, avgRate, 0) | eval subtotal=duration*avgRate | streamstats sum(subtotal) as total | timechart span=5m max(total) as total_lbs avg(avgRate) as rate_lbs_per_hr

I used some of the code from my previous question to make a plot over time as well.

Thanks for your persistence in understanding what I was after and your help on implementation! I really appreciate it.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you ask the question better, you get better answers. If this does work, then click "Accept" to close it out.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If your data is indeed "discontinuous" then you will be most dissatisfied with the performance (failure) of transaction. Give us sample data and current search with its output and then describe the desired output (as mocked up from your sample data). That is the only way that you are going to get a good answer to this question.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm not able to share sample data (industry, not a personal project). The data I am looking at come in from a sensor interfacing with Splunk in real time. The data in unit/unit time is stored in a field called Value, and the time information is stored in the standard _time field.

I am trying to (correctly) totalize or sum up total units within a window of time using the rate data. Put another way, I'm trying to use splunk to do numerical integration.

I described what I am seeing in text as best as I could below.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

OK, then share some fields. How do you know that an event is from a particular sensor; is it that they have the same host value or something else? Genericize one event and then tell us what fields are in it. It is not reasonable to expect a specific answer without more specific details.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The fields like source, host, etc are all the same as all the data is coming from one single server. So for most of what I'm trying to do it's not relevant. There is one field which uniquely identifies the data's origin and units, which I generalized to sensor in my code examples.

The primary field of interest is Value, which contains the data from the sensor, which is always in the same format of unit/unit time.

The time field and date* fields describe the time that the data was logged. It's not a fixed rate in between points.

Does that help enough?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

So you only have a single sensor then? That seems very strange and unlikely to be a stable situation (as soon as you get something good, you are probably going to be asked to do the same thing with more sensors). The reason I am asking is that you probably need to ditch transaction and use stats but we need a correlating field with which to work. In any case, if this is all you specify, then I cannot help.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you provide a link to documentation or a use example of a correlating field?

Or an example of how stats would work when one does have a correlating field to work with?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I just mean how do you say which events are for which sensor. Let's back up; what exactly are you trying to calculate? Are you trying to find gaps in your continuity where the sensor is not sending? Back all the way back up and start from scratch and I am sure there is a way to do what you need without transaction.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

There is a field which identifies the sensor, like a SensorName="Sensor A Units/Unit Time". By internal convention the units are in the name of the sensor.

For now I am looking at a single sensor--still getting used to splunk.

I have rate data. I am trying to calculate a total. So for ex. if my sensor was an endurance runner and then Value would a speed in m/sec, which updates while he is running. I am trying to take this speed and calculate how far he ran. The events are when the PLC has recorded the sensor data, and they are not evenly spaced. So I might have one event, get one 5 sec later, 4 sec later, 4 sec later, 10 sec later, etc.

I only want to count miles he ran, so I will insist that the speed is above a certain threshold before I want to consider it for totaling.

The method provided (in the other thread, linked in my question) for a trapezoidal sum works great if the runner is always running during the time period of interest. It breaks down if the runner stops. The distance total jumps up across the gap. I think I understand why that is -- you're just iterating through the table, so from the computer's perspective that time in between running is multiplied by whatever the rate was when he stopped and then added to the total.

I'm just not sure how to implement a solution that addresses that problem.

Does that help?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Edit:

What about something like this?

sensor Value>#

| eval time = _time

| delta value as valueDelta

| delta time as timeDelta

| table _time, time, timeDelta, Value, valueDelta

| eval area = time_delta * (Value + valueDelta)/2

| stats sum(area) as total

You could also try adding this after eval instead:

| bucket _time span=5m

| stats sum(area) by _time

if you needed it in more specific time chunks.

Can you try capturing your time in a separate field, and then allowing the _time to stay internal?

sensor Value>#

| eval time = _time

| transaction maxpause=2m maxevents=-1

Alternatively, you could try capturing some subset of the time information for the mvlist, for example, minutes:

sensor Value>#

| eval some_unit_of_tine = strftime(_time, "%m")

| transaction maxpause=2m maxevents=-1

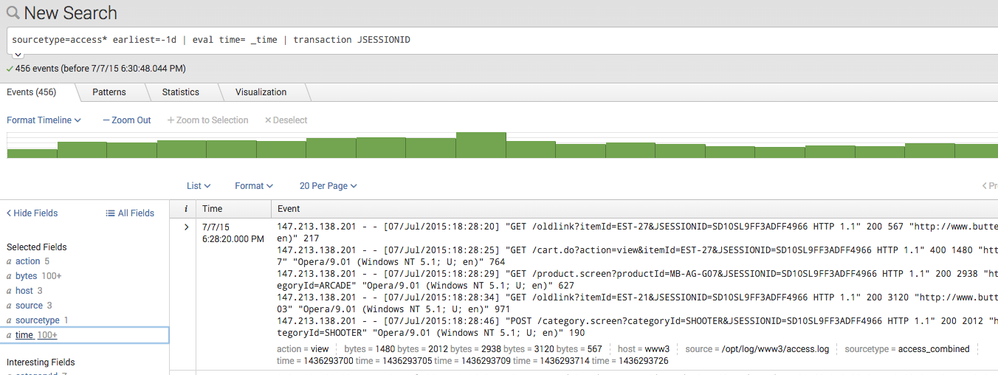

Here is an example screenshot, where I am grouping on the JSESSIONID field, but you'll see I have access to both bytes & time (for each event) and don't need to use mvlist.

Read more on common time options here and the eval command here.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

When I do this, the transaction search I am using breaks down.

sensor Value>#

returns 18,840 events in the specified time range

Adding

sensor Value># | eval time = _time | transaction maxpause=2m maxevents=-1

returns 5 transactions (representing 5 segments of continuous rate data)

Adding

sensor Value># | eval time = _time | transaction maxpause=2m maxevents=-1 mvlist=Value,time

returns 18,840 events, with no information from the transactions retained.

The totalizing search runs exactly as before with the aforementioned discontinuous jumps.

sensor Value>#| eval time = _time | transaction maxpause=2m maxevents=-1 mvlist=Value,time | reverse

| streamstats last(Value) as lastValue last(time) as lastTime current=f window=1

| eval area=(time - lastTime)*(Value+lastValue)/2

| streamstats sum(area) as total

| timechart span=5m max(total) as total

I will see if I can find a way to post an image. We have a lot of sites blocked on our internal network.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I see.

So given the output of

sensor Value># | eval time = _time | transaction maxpause=2m maxevents=-1

Do you not have access to the individual time and Value values? You should be able to see all of them without using mvlist.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I can see Value in the fields list but not _time. If I count _time I get a # of values equal to the number of transactions, 1 _time per transaction.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Did you try using the time (in contrast with _time) that you created with eval? You should have multiple time values PER transaction.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes! Now I do! I had to go into the fields manual and manually select time there.

Is there a command that will iterate through the transactions? Now that I can see the data I should be able to do the trapezoidal sum using the time and Value data in each of the transactions.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, after you have access to the values you need, you can iterate through all of them, which are now unique on the _time field.

It sounds like you really just want a sum - since you have one Value value per time value, right ?

That would be as simple as something like:

... | stats sum(Value) as sum by _time

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

edit: just saw your comment. I need more than just a sum since my data is a rate, and does not arrive at a fixed interval. If it was unit/sec and I got one event every 5 sec I could do it with a sum like you suggest, but my data is not quite that nice.

Here's the code from the other thread that acharlieh (is there a way to link usernames?) provided:

sensor | table time Value | reverse

| streamstats last(Value) as lastValue last(time) as lastTime current=f window=1

| eval area=(time - lastTime)*(Value+lastValue)/2

| streamstats sum(area) as total

| timechart span=5m max(total) as total

I'm still not quite sure how to mash these two bits of code together. I tried starting at the streamstats command and adding a by _time

like so:

sensor Value># | eval time=_time | transaction maxpause=2m maxevents=-1

| streamstats by _time last(Value) as lastValue last(time) as lastTime current=f window=1

| eval area=(time - lastTime)*(Value+lastValue)/2

| streamstats sum(area) as total

| timechart span=5m max(total) as total

but this generates an error Error in 'streamstats' command: Repeated group-by field 'as'.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@ErickaE you just add the @ sign to do an "at-mention" to link the username.

The error is coming from your by-clause in your first streamstats being in the wrong place (must come at the end).

I don't think the streamstats approach is going to work - because the last function only returns one number per transaction (where you need it per event within the transaction). You'd need to table out the values to get them in the table format (they're in the event format when they're spit out of transaction). Then you'd need to make them single-valued rather than multivalued (transaction is pushing all those values into one field)

Look at the data at this point:

sensor Value># | eval time=_time | transaction maxpause=2m maxevents=-1

Notice you're in the events tab.

Look at the data at this point:

sensor Value># | eval time=_time | transaction maxpause=2m maxevents=-1

| table _time time bytes

Notice you're in the statistics tab.

sensor Value># | eval time=_time | transaction maxpause=2m maxevents=-1

| table _time time bytes

| mvexpand bytes

| mvexpand time

Now you're getting back to the output from that other answer... and its at this point that you could then add the streamstats (with the by at the end), eval, streamstats, and timechart.

Having to do this much work though in general to get a delta & some simple math makes me, in addition to woodchuck, wonder if you really need to use the transaction command.

Is there any other field you can group by in order to avoid having to use transaction?